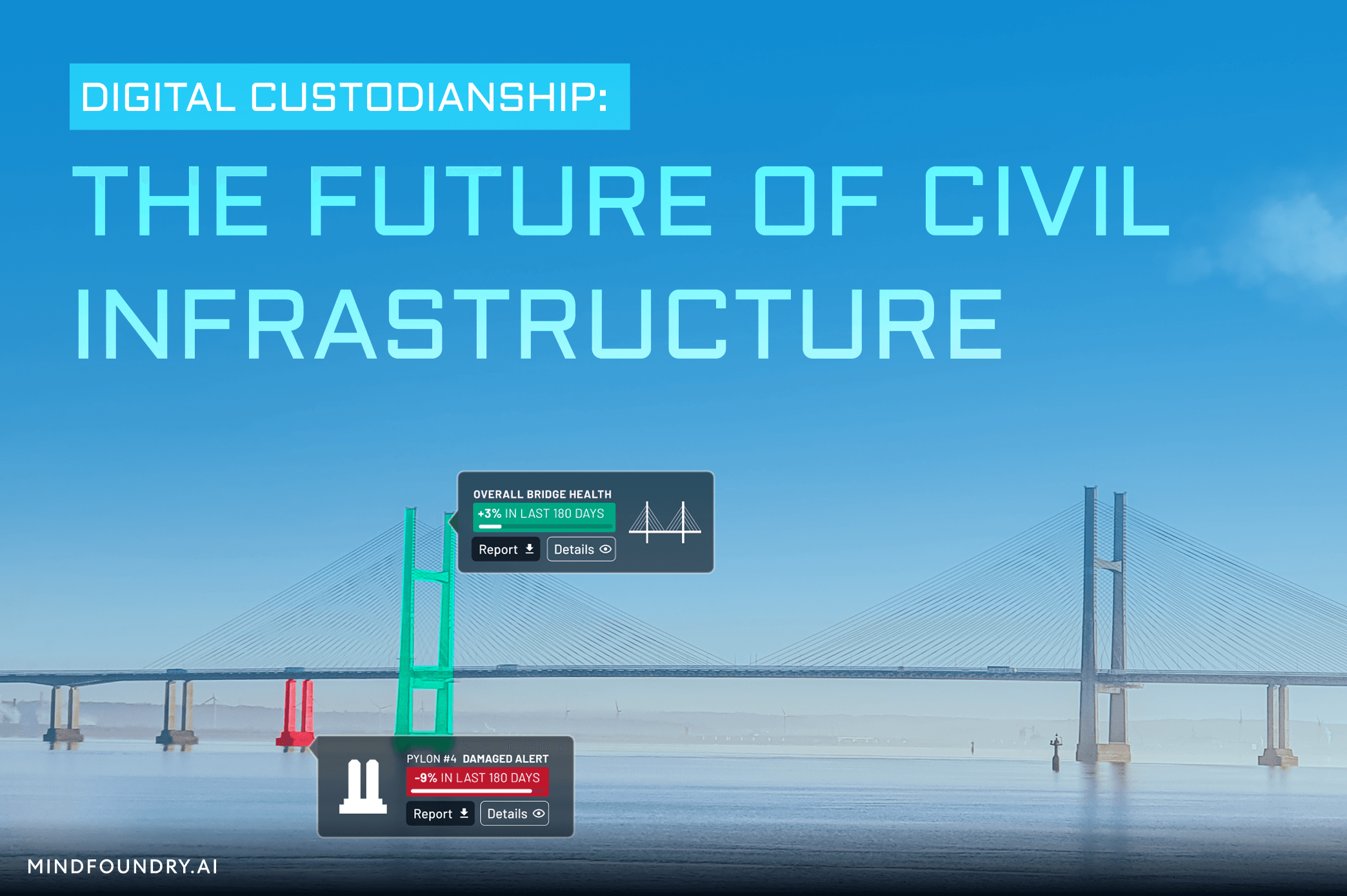


Insurance is an incredibly competitive industry. The sheer number of customers and competitors, combined with small margins, makes for an adversarial landscape. Pricing is a microcosm of the insurance industry, with every insurer jostling for the attention of increasingly savvy customers demanding cheaper, fairer quotes. It is one of any insurer's most mature, competitive, and important functional areas. In markets with price aggregation, mistakes are punished quickly and harshly as billions of quotes flow through the system. Against this backdrop, AI has become an essential tool in every insurer’s pricing armoury. But just as any tool must be used properly to do its job, AI must be adopted with effective governance to create value without causing unforeseen future damage.

How AI Has Changed Insurance Pricing

Before AI, most insurance premiums were set using a fixed-commission-plus model that determined a price by assessing risk and adding an extra percentage to pay the broker, cover the distribution costs, and make a profit. This worked adequately for many years until the rise of comparison sites required an added dimension. Since then, to be competitive has meant not only predicting the best price for your customer but also anticipating market factors like the price that all your competitors will offer. Being right is no longer enough. You have to be more right. More personalised. Smarter. And faster.
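The pre-AI approach described above can be sketched as a simple loading on top of the risk cost. This is a minimal illustration only: the function name, the three loading parameters, and all figures are hypothetical and are not taken from any insurer's actual rating model.

```python
def cost_plus_premium(expected_claims_cost, commission_rate,
                      distribution_rate, profit_rate):
    """Price = assessed risk cost plus fixed percentage loadings.

    All rates are fractions of the expected claims cost (hypothetical values).
    """
    if expected_claims_cost < 0:
        raise ValueError("expected_claims_cost must be non-negative")
    # Sum the percentage added for the broker, distribution, and profit.
    loading = commission_rate + distribution_rate + profit_rate
    return round(expected_claims_cost * (1 + loading), 2)


# Illustrative figures: 400 of expected claims, 10% broker commission,
# 5% distribution costs, 5% profit margin.
premium = cost_plus_premium(400.0, 0.10, 0.05, 0.05)
print(premium)
```

Note that nothing in this calculation looks at what competitors are quoting, which is the dimension comparison sites later made essential.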

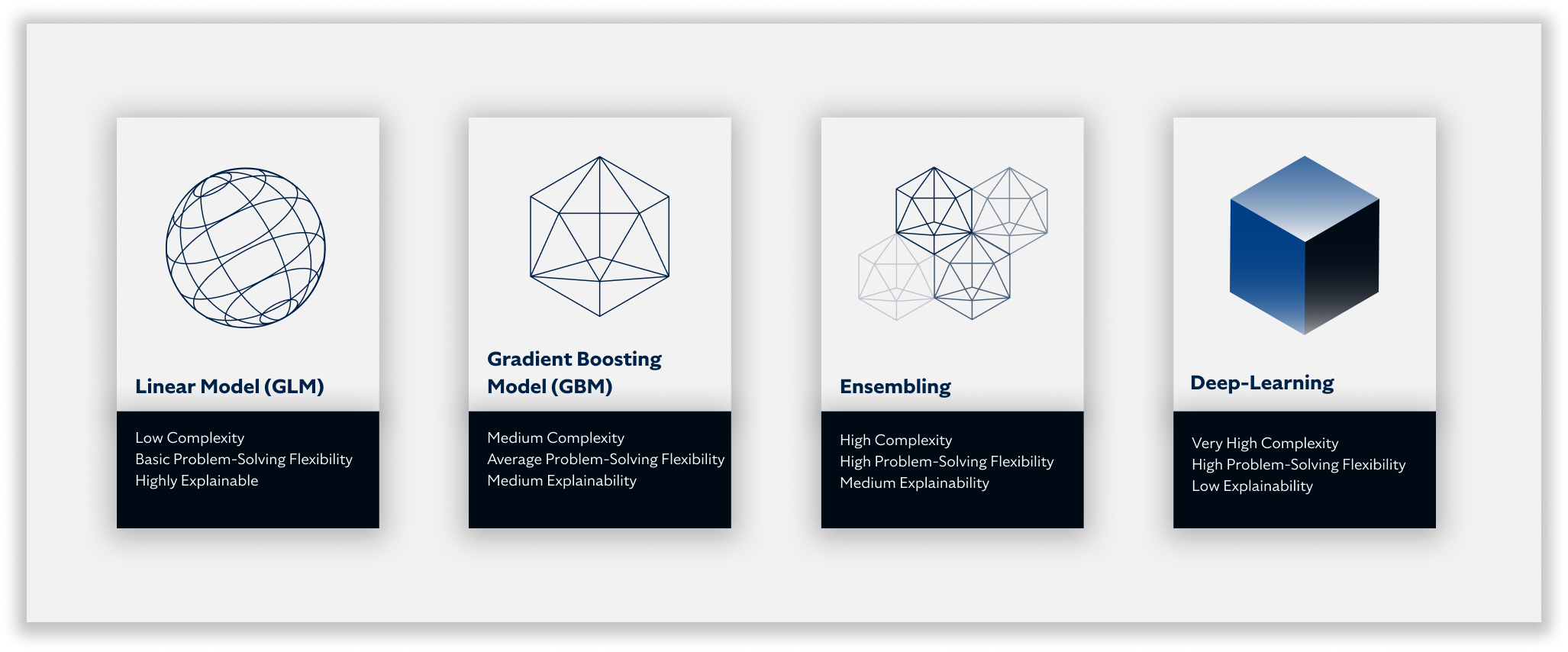

AI promises progress towards these desired outcomes, and its role in insurance has grown and evolved. Generalised linear models (GLMs) have given way to gradient boosting machines (GBMs), models have been chained together in increasingly complex pipelines, and once explainable, transparent models have become increasingly opaque, inscrutable, black-box ones. This has coincided with the looming spectre of tightening regulation and the subsequent need for responsible AI governance in the UK.

What is AI Governance?

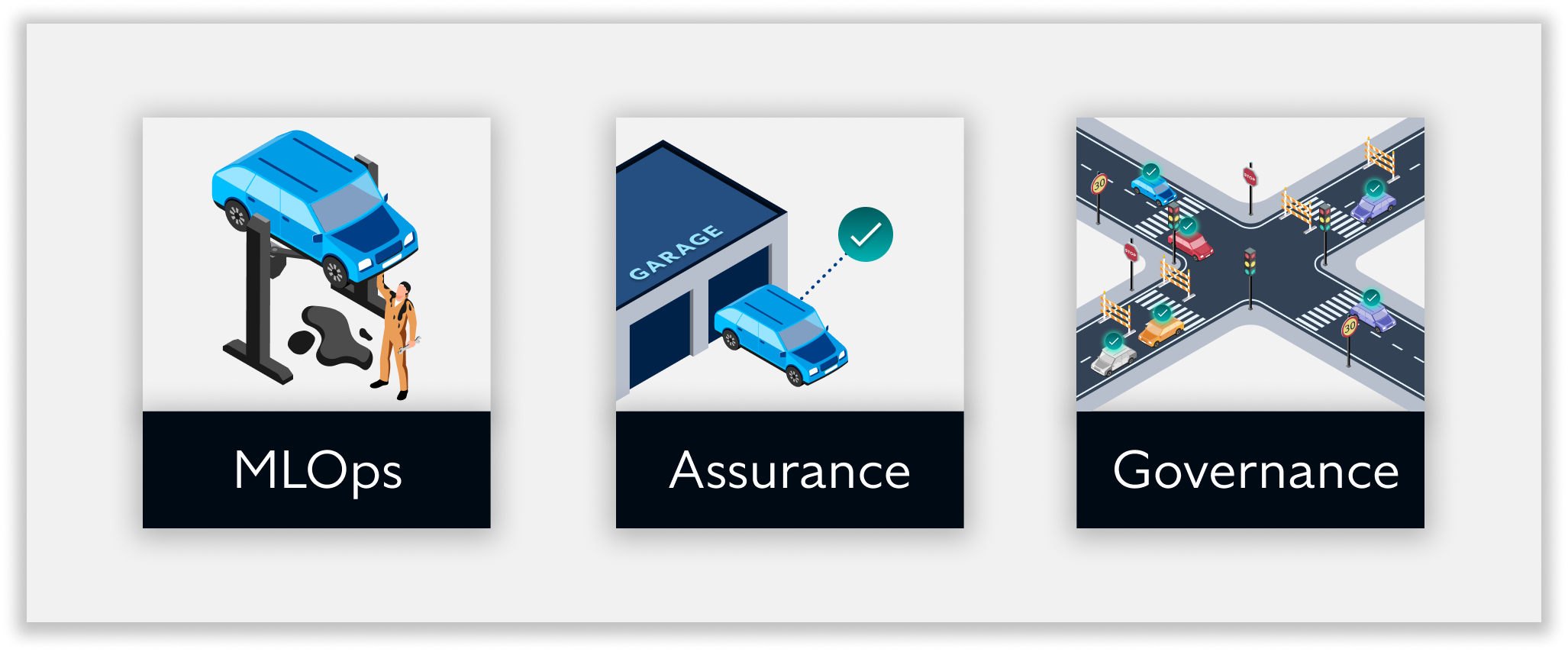

In plain terms, AI governance helps validate that things are going well with your AI. It’s a set of principles or processes that ensure your AI is developed and deployed in a way that is responsible, ethical, and aligned with business strategies and current regulations. Unlike MLOps, which is more focused on the functionality of AI systems from an engineering perspective, or assurance, which helps measure and judge performance, AI governance is fundamentally about assessing the full implications of AI in operation and the potential impact of these systems on end-users, customers, and the organisation as a whole, both at the point of deployment and on an ongoing basis.

If operationalising AI were like driving a car, MLOps would be the engineers, mechanics, fuelling and road infrastructure that “host” a car and ensure it travels efficiently and smoothly; assurance would be the MOT certifying officially that the car is technically sound. Governance would be the legislation and management of processes around driving tests, signoff for examiners, MOTs and services. In short, governance ensures that the right people are in the right place to continually test and assure that every car and driver on the road can drive together safely and responsibly.

AI governance necessitates the involvement of multiple stakeholders who must ensure that an AI system adheres to their specific policy of interest - data science correctness, pricing approach, compliance interpretation, and more. As AI progresses from being an innovation project towards a reliable and accountable member of your team, model governance becomes essential for any organisation looking to operationalise AI in a safe, responsible, sensible way.
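One way to picture multi-stakeholder sign-off is as a record per model that tracks which reviews have been completed. The sketch below is an assumption-laden illustration: the `ModelRecord` class, the three review names, and the reviewer names are all hypothetical, chosen to mirror the policies of interest listed above rather than any specific governance product.

```python
from dataclasses import dataclass, field

# Hypothetical review areas, mirroring the stakeholder interests named above.
REQUIRED_REVIEWS = ("data_science", "pricing", "compliance")


@dataclass
class ModelRecord:
    """Tracks which stakeholder reviews a model has passed."""
    name: str
    approvals: dict = field(default_factory=dict)

    def approve(self, review, reviewer):
        if review not in REQUIRED_REVIEWS:
            raise ValueError(f"unknown review: {review}")
        self.approvals[review] = reviewer

    def missing_reviews(self):
        # Preserve the order of REQUIRED_REVIEWS for stable reporting.
        return [r for r in REQUIRED_REVIEWS if r not in self.approvals]

    def ready_to_deploy(self):
        return not self.missing_reviews()


record = ModelRecord("motor_pricing_gbm")
record.approve("data_science", "A. Analyst")
record.approve("pricing", "P. Actuary")
print(record.missing_reviews())   # compliance has not signed off yet
print(record.ready_to_deploy())
```

In practice a record like this would also need timestamps, model versions, and re-approval when a model is retrained; those are omitted here to keep the idea visible.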

Governance in Insurance Pricing is About to Get Real

Last year, 73% of adults in the UK used a financial comparison website to pit insurers against each other and get the best price for their needs delivered back to them in mere seconds. It’s a tough game to win. It requires pricing models that extract every ounce of insight from the customer’s data, factor in competitors and the quotes they’re likely to offer, and return a quote optimised from every angle, consistently and instantaneously. But generating the winning price is only the first part.

Explaining how and why it did that is the second part, and it’s equally important. This is where model governance comes into play. Insurers need to consider the impact of their models on their customers and whether these models adhere to current or future regulations. This is especially relevant to insurers in the UK, considering that the Consumer Duty will come into effect on the 31st of July this year. It is being brought in and enforced by the Financial Conduct Authority (FCA) and will cover “larger fixed firms with a dedicated FCA supervision team, who primarily operate in retail financial services markets.” Insurance companies will fall under the duty’s jurisdiction, and the implications are far-reaching and significant.

[Figure: FCA timeline]

One of the ideas explicitly mentioned is “price and value”. For insurers, it means they must satisfy customers' expectations of fair value when paying for insurance. It’s not just about offering cheaper insurance, because even offering lower premiums can lead a customer to question whether they were getting fair value in the first place. If an insurer fails to answer these questions, the FCA will work with the Financial Ombudsman Service (FOS) to investigate customer complaints and establish whether the insurer is keeping to the regulations.

In any case, an insurer must provide evidence that their pricing models are making recommendations fairly, based on unbiased data and without relying on protected customer characteristics. The rules clearly state that “a key part of the Duty is that firms assess, test, understand and evidence the outcomes their customers are receiving. Without this, it will be impossible for firms to know they are meeting the requirements”. On an individual model level, insurers need to ensure that their pricing models have sufficient levels of explainability in order to understand and thus communicate their outputs to internal teams, customers, and regulators.
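As a rough illustration of what “evidencing outcomes” could involve, the sketch below compares average quoted premiums across customer groups and flags a gap above a tolerance. Everything here is an assumption made for illustration: the function name, the group labels, the 5% tolerance, and the sample quotes are hypothetical, and this is not a method prescribed by the FCA.

```python
from collections import defaultdict
from statistics import mean


def premium_gap_by_group(quotes, tolerance=0.05):
    """Compare average premiums across groups.

    quotes: iterable of (group_label, premium) pairs.
    Returns (averages, relative_gap, flagged), where relative_gap is
    (highest average - lowest average) / lowest average.
    """
    by_group = defaultdict(list)
    for group, premium in quotes:
        by_group[group].append(premium)
    if not by_group:
        raise ValueError("no quotes supplied")

    averages = {group: mean(values) for group, values in by_group.items()}
    lowest, highest = min(averages.values()), max(averages.values())
    if lowest <= 0:
        raise ValueError("average premiums must be positive")

    gap = (highest - lowest) / lowest
    return averages, gap, gap > tolerance


# Hypothetical quotes for two customer groups.
quotes = [("A", 500.0), ("A", 520.0), ("B", 600.0), ("B", 630.0)]
averages, gap, flagged = premium_gap_by_group(quotes)
print(averages, round(gap, 3), flagged)
```

A flagged gap is only a prompt for investigation, not proof of unfair pricing: the groups may differ in genuine risk, so a real analysis would need to control for legitimate rating factors before drawing conclusions.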

The Cost of Ineffective AI Governance

True AI governance is about more than just managing individual models. A large insurer may already have hundreds of models in operation, from pricing to claims handling and fraud detection, and most intend to continue scaling their AI adoption. As the Consumer Duty deadline approaches, the consequences of failing to enact proper AI governance for all your models will become a stark reality.

Regulatory Action

As the Information Commissioner’s Office notes, “If the rules are broken, organisations risk formal action, including mandatory audits, orders to cease processing personal data, and fines.” If one model operates in a way that violates these rules, your entire business is exposed to these potential consequences. In 2022, the FCA fined Santander UK Plc £107 million after it repeatedly “failed to properly oversee and manage its anti-money-laundering systems”. This was one of 26 fines handed out by the FCA in 2022, the first year of its three-year strategy to become more innovative, assertive, and adaptive.

As we enter 2024 and get closer to the final year of their strategy, we expect the FCA to act faster and address more ongoing risks. In their own words, “We will more readily make use of our formal powers to reduce and prevent harm, accepting a higher risk of legal challenge. We will adapt our response to the circumstances we face, targeting the requirements we impose on firms so they match the risks they pose.” The FCA goes on to state, “We will focus not only on checking whether firms comply with disclosure rules but also on whether consumers understand the products they buy. We will expect to see evidence of this.” Insurers must be prepared.

Fines for Lack of Transparency in 2024

Around the world, more than 37 countries have proposed AI-related legal frameworks to address public concerns for AI safety and governance. Many of these, like the EU AI Act or the United States executive order for safe AI, won’t manifest as laws at the highest levels for another year or two, but we don’t have to wait to see what fines for lack of transparency will look like. In 2021, regulators in Italy fined Deliveroo €2.5 million for a lack of transparency in the way they collected data and used algorithms to manage riders. Insurers in the UK haven’t been fined (yet), but they’re getting close.

In September 2023, the UK Information Commissioner warned the UK Government that it risked contempt of court over the Department for Work and Pensions’ (DWP) handling of requests for transparency over its use of AI to vet welfare claims and the “veil of secrecy” over the AI system it had used. This was prompted by the Guardian issuing freedom of information requests to find out more details about the data and algorithms that were being used to deny certain claims, as well as the results of a “fairness analysis.” The DWP refused to provide the requested information, arguing that providing information could help fraudsters. As regulations kick in, laws get created, and the public builds an appetite for more and more transparency, we expect to see many more of these fines in 2024.

Falling Behind the Competition

Insurers who are ahead of the game in AI governance will be able to position themselves as offering fairer prices to their customers because they can communicate the reasoning behind every pricing decision. As the ICO notes, “If you don’t provide people with explanations of AI-assisted decisions you make about them, you risk being left behind by organisations that do”. In insurance pricing, where enough marginal degrees of optimisation can lead to entire market dominance, being ahead of the curve in governance terms can be the deciding factor, ensuring profitability and compliance as your AI scales. Conversely, with the ferocious competition and the speed of change in the industry, those who fall behind may struggle to catch up.

Future Success Means Immediate Action

Some insurers are already waking up to the growing importance of AI governance. They are taking steps to begin the process of putting in place structures to build out capability, but the lack of insurance-specific software solutions on the market means they’re forced to attempt everything manually in-house, a time-consuming and resource-intensive process that actually hinders their ability to scale AI properly. Nevertheless, as governance becomes more and more essential in insurance, automated solutions that accelerate the rewards for those who have taken these initial steps will soon become available.

AI regulations around the world are no longer just ideas and proposals. Changes affecting how every organisation builds, deploys, and uses AI are already in motion. Governance is the protection that every insurer will need in order to scale their AI in a way that can adapt to these changes whilst still delivering significant value to the business. But as with regulation, the time to talk about governance has passed, and the time for action has arrived.
