Despite the ocean covering over 70% of the Earth’s surface, we have only physically explored 5% of it. Part of the reason so much of it is left unexplored is that 90% of the ocean's depths exist in perpetual darkness. At a depth of only 1 metre, just 45% of light penetrates. At 10 metres, that number is down to 16%. Once you get 1000 metres down, all is darkness. But where light cannot go, something else can... Sound.

Toothed whales, like dolphins, belugas, and sperm whales, use their biological sonar system to navigate and locate food underwater by emitting high-frequency pulses of sound. Over the course of millions of years, they’ve evolved brains and specialised organs that allow them to see sound, much as we see light. Sound Navigation And Ranging, or Sonar, allows a whale to gain a three-dimensional perspective of its environment and detect minute objects from great distances. It can even discern an object's shape and composition.

What could we do if we had the same capability?

The Evolution of Sonar

Sonar’s origins go as far back as the 19th century. In 1826, on Lake Geneva, physicist Jean-Daniel Colladon and mathematician Charles-François Sturm made the first recorded attempt to determine the speed of sound through water. In their experiment, one of the men sat in a boat and used gunpowder to simultaneously create a visible flash and strike an underwater bell hanging a few metres beneath the water's surface. The flash from the gunpowder and the sound of the bell were observed 10 miles away by the other man in a second boat, who listened to the underwater sounds through a tube. By measuring the time between the gunpowder flash and the sound reaching the second boat, they were able to calculate with impressive accuracy the speed of sound in water at a temperature of 8 °C.
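The arithmetic behind the Lake Geneva experiment is simply distance divided by travel time, treating the flash as arriving instantly. The sketch below illustrates it in Python. The 10-mile distance comes from the account above, but the delay value is a hypothetical stopwatch reading chosen for illustration, not the historical measurement, and the function name is our own.

```python
MILES_TO_METRES = 1609.344


def speed_of_sound(distance_m: float, delay_s: float) -> float:
    """Estimate the speed of sound from the gap between seeing the flash
    and hearing the bell. Light's travel time is treated as zero."""
    if delay_s <= 0:
        raise ValueError("delay must be positive")
    return distance_m / delay_s


# Distance quoted in the account above.
distance_m = 10 * MILES_TO_METRES

# Hypothetical delay between flash and bell (illustrative only).
delay_s = 11.2

print(f"Estimated speed of sound: {speed_of_sound(distance_m, delay_s):.0f} m/s")
```

Because light covers 10 miles in a tiny fraction of a millisecond, ignoring its travel time introduces a negligible error compared with the precision of a hand-timed measurement.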

Illustration of Colladon and Sturm's experiment on Lake Geneva

In 1906, Lewis Nixon invented the first sonar-type listening device to detect icebergs. The need for this technology was brought into sharp focus in 1912 when the Titanic sank after striking an iceberg. A few months after the disaster, an English scientist, Lewis Richardson, filed a patent for the echolocation of icebergs in water.

Following the outbreak of World War 1 in 1914, it soon became apparent that submarines would pose a significant threat to any naval operations. In 1915, French scientist Paul Langevin invented the first sonar-type device for detecting submarines, a "piezoelectric quartz transducer". Although his invention arrived too late to make a significant difference during the war, his theories and research would go on to be hugely influential in the development of sonar technology.

The pressing need for more advanced sonar technology was thrown into stark relief by the advent of submarine warfare in WW1. Even though German submarines, or U-boats, were fairly rudimentary by modern standards, the devastation they caused was staggering. By the end of the war, Germany had built 334 U-boats, and they had destroyed over 10 million tons worth of Allied vessels. It became immediately apparent that submarines would become a central component of warfare for years to come, and so work to devise tools and techniques to detect, locate, and neutralise this new threat accelerated to new levels.

When war broke out again in 1939, submarines would once more play a pivotal role in the struggle for naval supremacy, threatening Allied shipping lanes and striking fear in the minds of sailors and the public alike. Over the course of the war, U-boats sank 2,603 merchant ships along with 175 naval vessels. When reflecting on this in his memoirs years later, Winston Churchill would say, “The only thing that ever really frightened me during the war was the U-boat peril”. Although travelling in convoys with support from the air proved effective at mitigating the threat of U-boats, it was the advances in sonar and echolocation technology that enabled the Allies to detect these threats, making anti-submarine warfare one of WW2’s enduring military legacies.

Three Obstacles to Sonar Technology

Sonar can be broadly split into two types: active sonar and passive sonar.

Active sonar systems emit a pulse of sound into the water and analyse the returning echoes to detect and locate hidden objects.

Passive sonar systems wait patiently, silently monitoring underwater sounds from objects in the vicinity. This allows for the detection and tracking of vessels and other marine activity.
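To make the active case concrete, here is a minimal Python sketch of echo ranging: slide the transmitted pulse along the received signal to find where it matches best, then convert that round-trip delay into a distance. Everything in it is an illustrative assumption rather than a description of any real system: the pulse shape, the sample rate, the nominal sound speed of 1500 m/s, the noise-free received signal, and the function names.

```python
SAMPLE_RATE_HZ = 1000       # assumed sampling rate
SOUND_SPEED_MPS = 1500.0    # nominal speed of sound in seawater (assumption)


def estimate_delay_samples(pulse, received):
    """Return the lag (in samples) at which the pulse best matches the
    received signal, using a simple sliding correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(pulse) + 1):
        score = sum(p * received[lag + i] for i, p in enumerate(pulse))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def echo_range_m(round_trip_s, sound_speed_mps=SOUND_SPEED_MPS):
    """The sound travels out and back, so the range is half the path."""
    return sound_speed_mps * round_trip_s / 2.0


# Hypothetical pulse, emitted at sample 0.
pulse = [1.0, -1.0, 1.0, 1.0, -1.0]

# Simulated received signal: silence plus one attenuated echo.
received = [0.0] * 2000
echo_start = 400
for i, p in enumerate(pulse):
    received[echo_start + i] += 0.3 * p

lag = estimate_delay_samples(pulse, received)
round_trip_s = lag / SAMPLE_RATE_HZ
range_m = echo_range_m(round_trip_s)
print(f"Echo after {round_trip_s:.3f} s, estimated range {range_m:.1f} m")
```

A passive system has no transmitted pulse to correlate against, which is one reason interpreting its data leans so heavily on the operator.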

Sonar technology today can be found in places like ship hulls, seabed systems, or towed arrays. These sonar sensors and systems collect valuable streams of acoustic data, ready for human operators to interpret. However, this is where things get challenging. Today’s human operators typically face three major problems.

1. Environmental Complexity

The underwater environment is complex. Sounds vary in intensity, propagate in non-linear pathways, and are affected by temperature, salinity, and other variables like currents, seabed material, and weather. Sounds also arrive from all directions, near and far; the sounds of seismic activity or certain whale calls can be heard from hundreds of miles away. The resulting signals detected by any sensor are a combination of many different sources, from fishing vessels and earthquakes to organic life and other signals of interest.
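As a rough illustration of how temperature, salinity, and depth change the way sound travels, the Python sketch below uses Medwin's simplified empirical formula for the speed of sound in seawater. This formula is an outside reference that the article itself does not cite, it is only an approximation, and the input values are arbitrary examples rather than measurements.

```python
def sound_speed_mps(temp_c: float, salinity_ppt: float, depth_m: float) -> float:
    """Approximate speed of sound in seawater (m/s) using Medwin's
    simplified empirical formula. Inputs: temperature in degrees Celsius,
    salinity in parts per thousand, depth in metres."""
    return (
        1449.2
        + 4.6 * temp_c
        - 0.055 * temp_c ** 2
        + 0.00029 * temp_c ** 3
        + (1.34 - 0.010 * temp_c) * (salinity_ppt - 35.0)
        + 0.016 * depth_m
    )


# Arbitrary example conditions (temperature, salinity, depth).
conditions = [
    (8.0, 35.0, 0.0),
    (20.0, 35.0, 0.0),
    (8.0, 35.0, 1000.0),
]

for temp_c, salinity_ppt, depth_m in conditions:
    c = sound_speed_mps(temp_c, salinity_ppt, depth_m)
    print(f"T={temp_c} C, S={salinity_ppt} ppt, z={depth_m} m -> {c:.1f} m/s")
```

Because the speed varies with conditions along the path, sound bends as it travels, which is part of why the same source can reach a sensor along several different routes.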

These complex audio traces contain all these components simultaneously, some of which will be incomplete or arrive at varying times as they travel across different sound propagation pathways. The potential to unlock insights from this complexity is huge. But the sobering reality is that all of this high-quality, three-dimensional data gets flattened into two dimensions and displayed on a screen, making it incredibly difficult to tell one thing from another.

Two objects that are actually quite different may look the same.

Sometimes, the problem is that there isn’t enough information to create a clear picture. But more information isn’t always the solution. And this is the second problem.

2. Cognitive Load

Cognitive load refers to the mental effort required to process and understand information, and it rises sharply when systems are too complex or overwhelming. As Don Norman, a pioneer in Human-Computer Interaction, emphasises in The Design of Everyday Things, technology should align with human cognitive strengths and compensate for human limitations, such as a finite capacity for cognitive load.

In sonar technology, this is a significant issue because the environments in which sonar data is interpreted are often stressful and high-stakes, and they demand rapid decision-making. Adding massive volumes of data, each with differing formats and levels of relevance, compounds the problem. Instead of aiding the operator, poorly designed systems can inadvertently overload them, increasing the likelihood of errors or delays in critical moments. Following the principles of human-centric design, sonar systems must reduce cognitive load and support intuitive interaction so that human operators can make clear, informed decisions even under pressure.

3. Evolving Threats

Dolphins learned to recognise the sound signature of their predators (mostly sharks) millions of years ago, and those signatures are static and predictable: the shark's sound never changes. However, the sounds of the objects that a sonar operator wants to identify are rapidly changing. Evolving. Year after year. Keeping track of these changes and how they alter acoustic signatures of interest is a challenging task. It gets even more challenging when you consider that, as the ocean gets louder and louder with myriad mechanical objects, the ones that really matter to a sonar operator are getting quieter and quieter.

Some now radiate so little noise that it’s almost impossible to hear them. (But AI can.)

How can AI help overcome these challenges?

What if humans could use AI for sonar processing to help them reduce environmental complexity, manage cognitive load, and stay ahead of evolving threats? What if we could use sonar as intuitively and effectively as the animals that have been using it for millions of years?

Let’s unleash human-AI collaboration that brings together the best aspects of human and machine intelligence to achieve more than either could on their own. By combining traditional approaches with AI and directly encoding human understanding of the maritime domain, we can simplify the underwater problem space and reduce training data requirements. This enables systems to make more sense of complex signal types than we can with conventional techniques alone. By deconstructing inputs to their basic components and recombining them as outputs that are informed by human domain expertise, data can be harnessed far more efficiently, giving sonar operators a clearer picture of the world around them and enabling faster and better decision-making.

Let’s reduce the cognitive burden on sonar operators by enabling better prioritisation of already limited human focus and time. When AI is combined with domain expertise and carefully calibrated to an operational problem, it can swiftly process data to amplify signals and, more importantly, reduce noise. Instead of trying to find the needle in a vast haystack, let’s use AI to reduce the size of the haystack so that a human can find the needle faster.
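One simple way to picture "reducing the size of the haystack" is a triage step that discards low-confidence detections and shows the operator only the highest-ranked few. The Python sketch below is purely illustrative: the `Contact` type, the scores, the threshold, and the `triage` function are all hypothetical, and a real system would obtain its scores from a trained model calibrated to the operational problem.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    label: str
    score: float  # hypothetical model confidence in [0, 1]


def triage(contacts, threshold=0.5, top_k=3):
    """Drop contacts below the threshold, then return the top_k
    remaining contacts, highest score first."""
    kept = [c for c in contacts if c.score >= threshold]
    return sorted(kept, key=lambda c: c.score, reverse=True)[:top_k]


# Made-up detections for illustration only.
contacts = [
    Contact("fishing vessel", 0.35),
    Contact("whale call", 0.20),
    Contact("unknown quiet contact", 0.91),
    Contact("merchant ship", 0.62),
    Contact("seismic event", 0.10),
    Contact("small craft", 0.55),
]

for contact in triage(contacts):
    print(f"{contact.score:.2f}  {contact.label}")
```

The human still makes the call. The triage step only decides what deserves attention first.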

Finally, let’s stay ahead of evolving threats by designing systems that facilitate continuous learning and improvement and are built to be deployed in the most challenging environments. All of this is possible with an understanding of AI’s capabilities, the right strategies and skills to integrate AI systems, and an AI partner that understands your problem space and the measures required to deliver impact within it.

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...

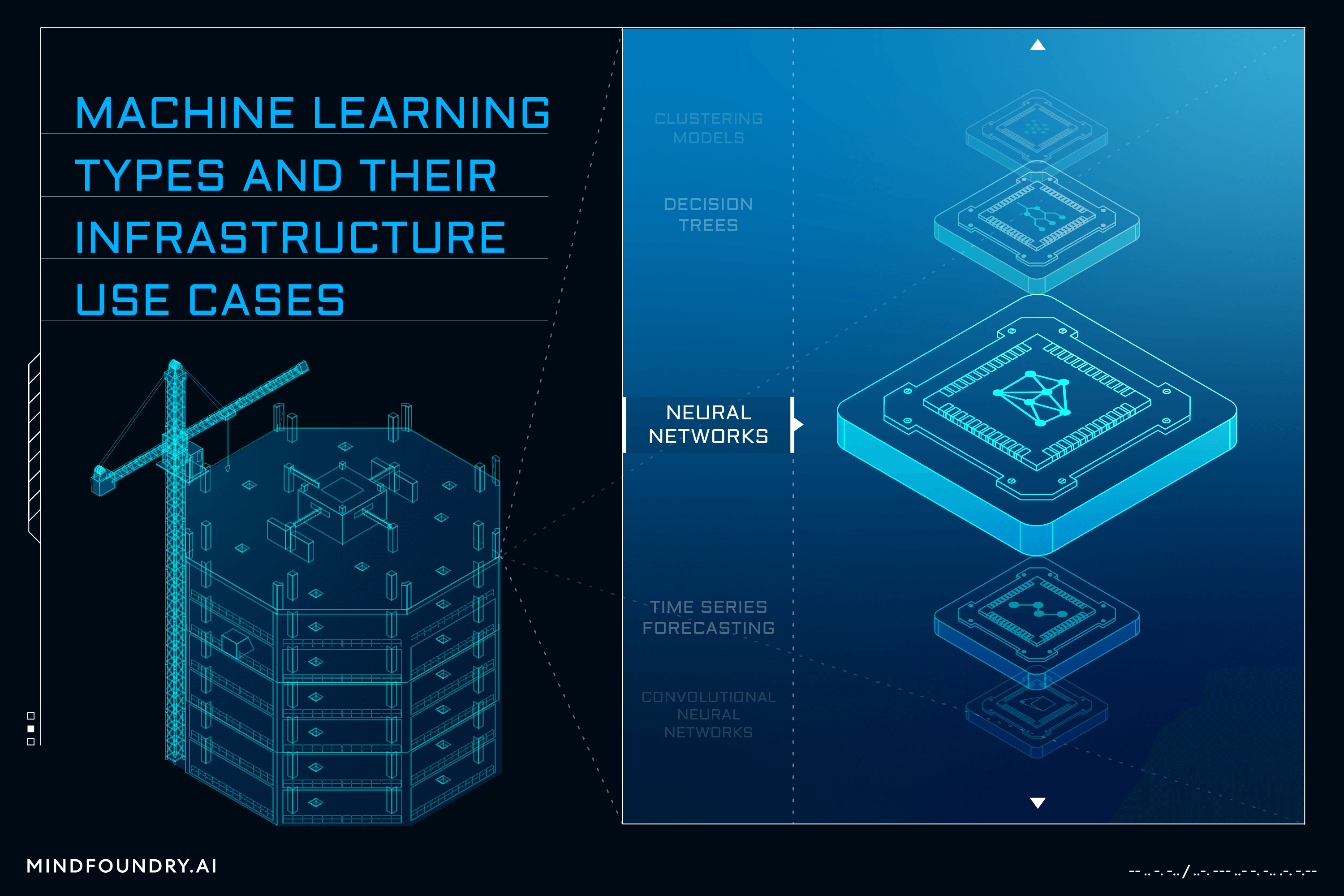

AI and Machine learning is a complex field with numerous models and varied techniques. Understanding these different types and the problems that each...

While the technical aspects of an AI system are important in Defence and National Security, understanding and addressing AI business considerations...