Insuring Against AI Risk: An interview with Mike Osborne

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...


Nick Sherman, Mar 14, 2025 11:05:47 AM

As AI becomes more pervasive across our society, it has become increasingly clear that the technology has enormous potential to improve our lives. That potential ranges from smaller, individual-level improvements, such as democratising access to software engineering and virtual personal assistants, to more significant advances, such as combatting climate change and searching for cures for cancer. Yet as we learn more about AI, it has become equally clear that these benefits come with significant risks and potential for harm when the technology is used irresponsibly. As investment in AI continues to ramp up across the globe, we must explore ways to protect ourselves from these harms. This is where insurance could play a pivotal role.

Mind Foundry, the Aioi R&D Lab - Oxford, the Oxford Martin School initiative on AI governance at the University of Oxford, and partners in the US at the Institute for Law and AI are researching the potential for insurance to help protect organisations and individuals from some of AI’s most significant risks and harms.

Professor Mike Osborne is a co-founder and Co-Chief Science Officer (CSO) at Mind Foundry, an Advisor to the Aioi R&D Lab - Oxford, a Co-Director of the Oxford Martin School AI Governance Initiative, and one of the leading thinkers on AI Safety and AI Risk. We filmed an interview with him to learn more about his project, a research paper titled “Insuring Emerging Risks from AI”, as well as his wider areas of research. A longer, edited written version of that interview follows.

Mike Osborne: The project's catalyst was a debate about the various harms that AI might introduce into our society, particularly given the increasingly rapid pace of progress in the field. A core goal of the project was to develop a taxonomy of the risks emerging from AI, and there are many ways to think about those risks. For instance, there are risks from the malicious use of AI models, but there are also risks from AI models failing or underperforming, including by hallucinating.

Many small and medium-sized firms today are really interested in investing in new AI technologies but are held back by the risks inherent in that investment. The first of those risks is simply that the technology does not work as they hope. There are also risks from AI hallucinations, which can damage a firm’s reputation or brand. For example, if an AI hallucinates something harmful or inappropriate in a response to a customer, the customer may see that hallucination as a failure of the firm that deployed it. What these firms really want is peace of mind. They need some way of assuring themselves that even in the worst-case scenarios, where their models misfire and hallucinate, the harms will not be too great.

Mike Osborne: One form of harm that is very real but receives less media coverage is the cybersecurity risk associated with AI. Large language models play an important role here because they have made it much easier to launch cyber attacks. They give malicious actors new means to explore code bases, identify vulnerabilities, and launch new forms of attack at scales that haven't been possible in the past.

Another avenue of exploration was the risk posed by the introduction of autonomous vehicles (AVs). As AVs become more common on our streets, society will be forced to think in new ways about how those vehicles should be insured and how the harms from accidents should be treated under the law.

Our research also considered the uncertain potential and risks of AI agents, which many cutting-edge firms are now investigating for the automation of their work.

Given those potential risks and harms, we focused our research on two pivotal questions. The first was, “How might insurance firms address the harms of AI?” The second built on the first: “How might insurance firms be a key lever for governments in deciding how AI should be regulated?”

We determined that insurance could be a core means of addressing those new risks.

Mike Osborne: What insurance could offer is what it always offers. Security. Peace of mind in a world of uncertain outcomes. Reduced risk. Individual companies that don’t fully understand the landscape of AI-driven threats, and may therefore be hesitant to invest in digital transformation, could look to their insurance partner to reduce that risk. In the uncertain landscape of AI risk we find ourselves in today, this financial security from a trusted insurance partner could bring many future benefits and allow AI to transform our lives, our businesses, and our world responsibly and safely.

Of course, counteracting these new threats is difficult because they are constantly emerging and rapidly changing. Some firms may be big enough to absorb these risks themselves; they can self-insure. But small and medium firms may need these new insurance policies so that they can invest in powerful new technologies without worrying that those technologies will cut deeply into their bottom lines.

In short, we think insurance will let you share risk. It brings the winners and losers closer together, propagating AI's benefits across the economy and society.

Mike Osborne: 2024 was the year of AI regulation, with policymakers worldwide giving deep thought to how to regulate this rapidly developing technology. Nevertheless, AI regulation today remains fragmentary and incomplete, and insurers could play a pivotal role in ensuring that the harms from AI are mitigated and that AI's benefits are broadly shared.

Insurance for AI could be essential in a regulatory context because not all the harms from AI fall within the purview of regulators. For instance, firms might worry about the damage an AI investment will do to their bottom line if it underperforms, but that's not something the law will ultimately govern. Those harms still matter, because they might prevent firms from investing in these transformational technologies, which could unlock powerful new products and services that help our societies flourish. In other words, insurance can tackle harms that the law can't.

Furthermore, insurance can give the law new levers to act. When it comes to driving, for example, we're all accustomed to being required by law to hold insurance that safeguards the potential victims of accidents involving our vehicles. Similar mandatory insurance for the firms developing these risky but powerful new AI models would give those firms a strong incentive to develop and deploy their models robustly, safely, and securely, because if they don't invest in responsible development, they'll pay more for their insurance. Mandatory insurance would also provide some comfort to any victims of those models if a model’s outputs lead to harm.

Mike Osborne: AI, insurance, and the relationship between the two are likely to change significantly in the coming years, and rapid changes in AI will have profound impacts on the insurance industry. The rise of autonomous vehicles will likely transform auto insurance, perhaps shifting liability from drivers to manufacturers, with claims mostly attributed to faults on the manufacturers' side. But alongside that potential shrinking of the auto insurance market, new insurance markets will open up. For instance, there could be insurance against the cybersecurity threats that AI poses, covering cases where AI is the victim of an attack as well as cases where it is the threat. There could also be new products for insuring AI agents, which many firms are investigating today.

AI's future is uncertain, but we know that much must be done to safeguard us against some of the worst failures AI might introduce. In insurance, we have a powerful tool for doing exactly that. The future of AI may, in fact, depend less on the developer’s code and more on the actuary’s spreadsheet.

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...

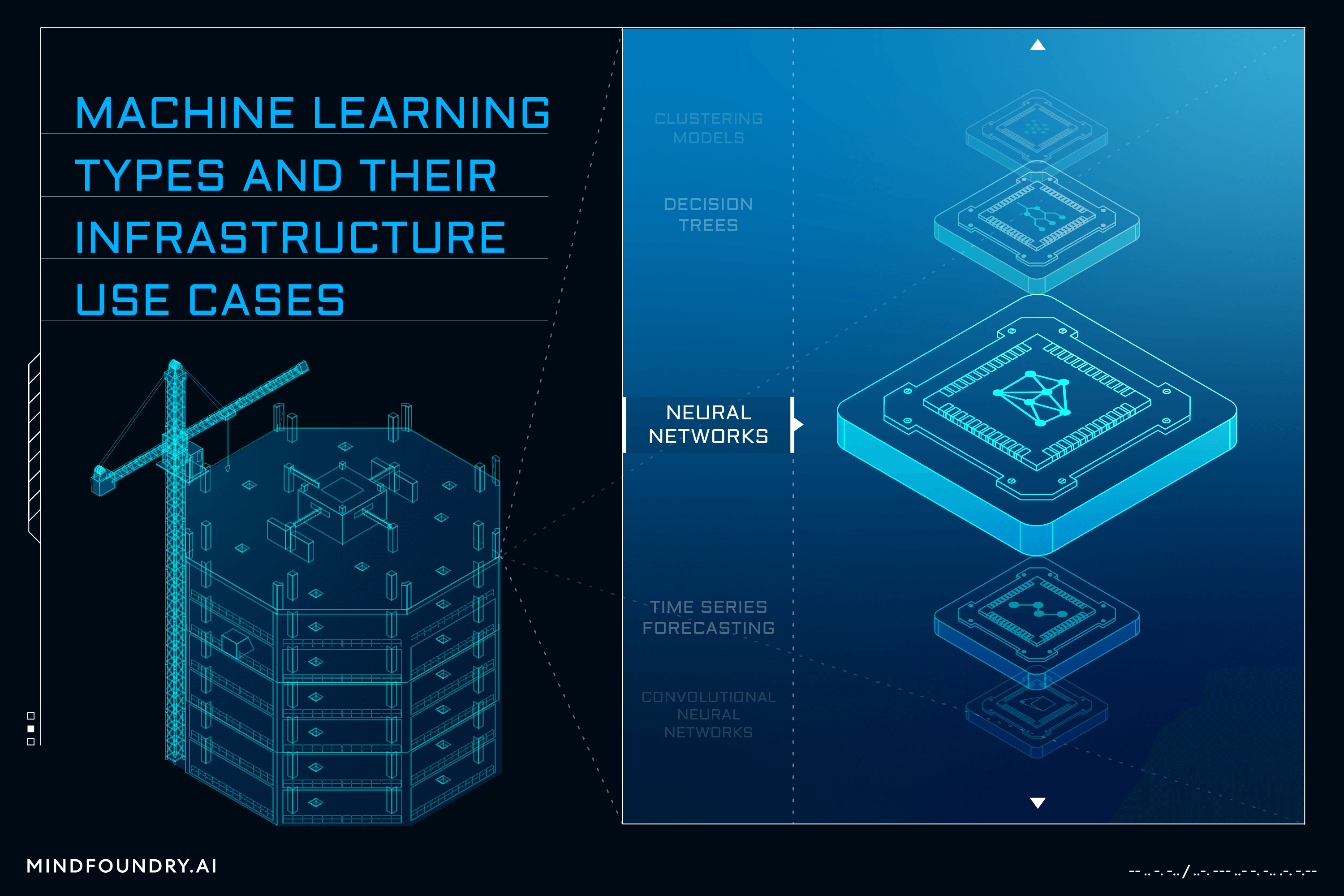

AI and Machine learning is a complex field with numerous models and varied techniques. Understanding these different types and the problems that each...

While the technical aspects of an AI system are important in Defence and National Security, understanding and addressing AI business considerations...