Insuring Against AI Risk: An interview with Mike Osborne

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...


Mind Foundry: Jun 1, 2023 12:13:44 PM

Generative AI (GAI) has been firmly at the centre of discussion ever since it was popularised by the launch of ChatGPT in November 2022. Although the insurance sector, with its focus on cost, has generally reacted with interest and excitement to the advent of Generative AI and Large Language Models (LLMs), there are limitations to the technology that represent significant obstacles to industry-wide adoption.

There is so much hyperbole and so many conflicting opinions about Generative AI in the media nowadays, and so many unanswered questions about what the technology is capable of, that it can be difficult to take a step back and consider its full potential across different industries.

For the same reason, it is also challenging to anticipate what the future limitations of Generative AI may be. In the insurance industry, AI has already been widely used in many forms for a range of different tasks. Historically, though, innovation in insurance has been a slow process, so naturally many are wondering whether Generative AI might represent a golden opportunity to solve problems across all the different areas of the industry.

It would not be controversial to suggest that the increasing use of LLMs in insurance is driven primarily by the desire for profit and cost-cutting rather than a genuine concern for customer well-being. These models are seen as tools that enable insurance companies to automate processes, reduce costs, and maximise profitability at the expense of individual policyholders.

The Potential for GAI in Insurance

AI deployment within the insurance industry is a case of “quick-quick-slow”, with insurers that initially adopted the technology tactically and sporadically now struggling to scale AI across their enterprise in a meaningful way. These organisations, in particular, are likely to be the ones most curious about how Generative AI might help kickstart AI integration within their business.

The varied capabilities of Generative AI mean it has numerous potential uses for the insurance industry. One tool fundamental to how many insurance companies engage with customers is the chatbot, and most customer service representatives already work from finely crafted written scripts when they engage with customers.

Insurers have been training chatbots on these scripts for years so that they can handle the most frequently asked customer queries without always needing a human operator. The first generation of chatbots began in 1966 with Joseph Weizenbaum’s ELIZA, but it wasn’t until the turn of the century that chatbot technology began to develop at pace. Early chatbots could answer only simple questions, with a limited range of responses, using techniques like decision trees and keyword recognition. Since the explosion of Generative AI in the last year or so, however, chatbots have become so good that often the best way* to tell whether you’re talking to a human or an AI is that the AI is simply too good: its response times and its knowledge of almost any relevant topic exceed human capacity.

However, taken to an extreme, this could lead to an excessively depersonalised approach to insurance, as the focus shifts from understanding and addressing the unique needs of policyholders to standardised, automated decision-making. Highly accurate, fast responses are great, but a lack of empathy and of tailored solutions could leave customers feeling neglected or undervalued.

*The other good way is to keep asking the chatbot to explain its decisions; if it attempts to do so, it quickly talks itself in circles and starts to spew nonsense.

The Imitation Problem

There is a real risk that insurers will take the misguided view that, because Generative AI has made such huge strides in a relatively short period of time, the industry should roll the technology out across all departments and expect immediate results. This expectation is unrealistic. In an industry like insurance, where change is constant and competition is fierce, innovation must be carefully considered and strategically aligned, and some aspects of Generative AI currently stand in the way of its wide adoption in the insurance sector.

Although there is much secrecy around the amount of data that systems like ChatGPT are trained on, there is no question that the figure is astronomical, and the models themselves are enormous (some unconfirmed estimates have put the number of parameters in GPT-4, one of the models underlying ChatGPT, as high as 100 trillion, though OpenAI has not disclosed the figure). This avalanche of data is a key factor in the system’s performance in terms of the speed, accuracy, and relevance of its outputs. In insurance, where data is constantly being collected at great scale from an increasing number of connected devices, it is natural to assume that this makes Generative AI the ultimate tool for revolutionising industry practices. But when we consider how Generative AI actually functions, we can see that the truth is not so clear-cut.

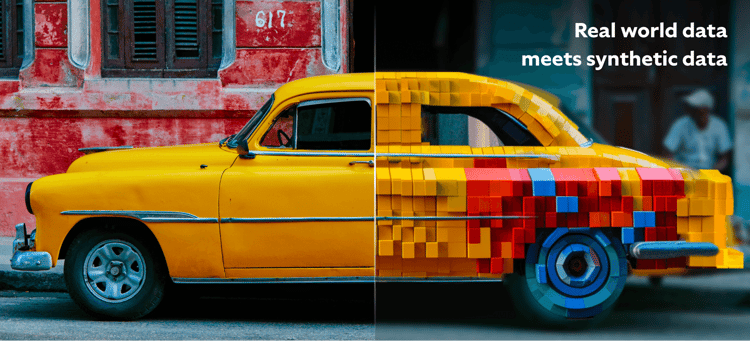

Generative AI takes data gleaned from the real world and uses it to generate, or rather hallucinate, outputs that blend multiple data points together based on the prompts you give it. You can use these hallucinations to make inferences about things that might not have been observed or understood previously. The potential applications for such capabilities are vast, from forecasting future events from climate data to developing new treatments from medical data. However, it is important to remember that these hallucinations are exactly that: hallucinations. Fundamentally, they are constructs that only resemble what we see in the real world.

Insurance Requires Real Data

This is certainly not to say that Generative AI has no place in insurance. Aside from the aforementioned opportunity for chatbot technology, there are also many routine business processes in the insurance community for which Generative AI could be extremely useful. For example, it can help draft legal documentation, augment customer communication, summarise and synthesise large text-based datasets, and generate marketing copy. These are time-consuming tasks in an industry that relies on delivering fast, agile solutions to customers with high expectations and evolving demands.

Nevertheless, we should consider not only the concerns about the existing capabilities of Generative AI but also the fact that there is so much about the technology and its potential that we have yet to understand fully. Meaningful progress in insurance often relies on observing, understanding, and reacting to changes in the complex real world, and to achieve this, you need information that is real, measurable, and reliably authentic. Generative AI is not yet at a stage where it can be universally adopted across all departments in all industries.

Demonstration of generative AI creation from a user prompt.

Although Generative AI has the potential to revolutionise the insurance industry by providing new ways to assess risk and create personalised policies, like any new technology, it also comes with a new set of risks and challenges.

Generating bias - One concern around the adoption of Generative AI in insurance is the real potential for bias in the data used to train the models. Decisions made by AI have already led to unfair treatment of, and discrimination against, certain groups of individuals, and the same could happen with Generative AI if its training data is biased or incomplete. The secrecy around Generative AI training data only exacerbates these concerns.

Generating backlash - The potential consequences of failing to address these concerns include significant regulatory backlash and reputational harm for the insurer, both of which can be incredibly damaging in an industry where margins are small and reputations are hard-won and easily lost.

Generating inscrutability - Another challenge is the difficulty of explaining AI-based models to regulators and customers. Regulators may require companies to provide clear explanations of how their systems make decisions, which can be difficult when the algorithms rely on complex, non-transparent techniques.

Generating privacy concerns - The use of LLMs in insurance raises significant privacy concerns. These models can process vast amounts of personal data, including sensitive information about individuals' health, finances, and lifestyles, and the collection, storage, and use of this data create risks of privacy breaches, data security failures, and misuse of personal information.

Generating regulations - The consequences of non-compliance with regulations can be severe. We have already seen companies like Meta receive huge fines for failing to comply with the EU's General Data Protection Regulation (GDPR). Insurance companies are not exempt from these rules, and as the technology continues to develop and new concerns and risks are discovered, regulatory pressure will likely only increase. The need for explainability in AI has never been more pressing.

The danger is that the rapid advancement of Generative AI technology has outpaced the development of ethical guidelines and regulatory frameworks. Plenty of industry commentators are arguing for stricter regulations and oversight to ensure that insurance companies using LLMs are held accountable for the fair and ethical treatment of policyholders.

Responsible AI

There is no doubt that Generative AI has the potential to transform the insurance industry, enabling companies to enhance risk assessment, fraud detection, and claims processing. However, adopting responsible AI practices is crucial to ensure fair and unbiased decision-making. Failure to do so could bring the entire industry into disrepute and invite further unwelcome legislation. Internal policies and controls must be in place to protect the privacy of policyholders and prevent discrimination. To maintain customer trust and uphold ethical standards, it is essential for insurers to prioritise responsible AI practices throughout their operations.

These responsible practices must go beyond the narrow scope of regulatory compliance and be ingrained in the daily operations of the entire organisation. Decisions made in the insurance industry have a real impact on people’s lives, so the AI that helps guide and inform these decisions must be held to the highest standards of responsibility and explainability. Done correctly, this will be the key to AI in general, potentially including Generative AI, creating real and lasting value for businesses and customers alike.
