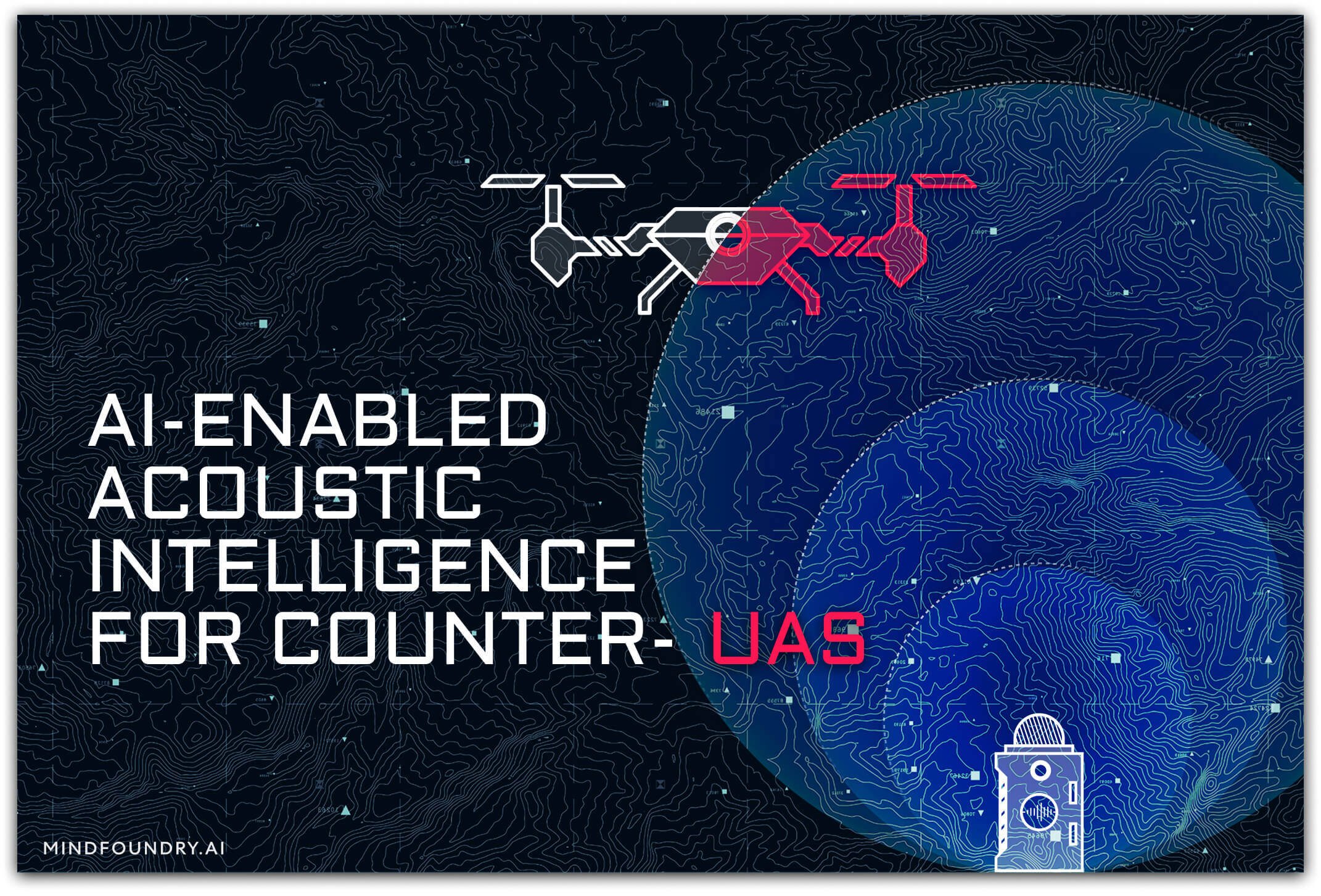

AI-enabled Acoustic Intelligence for Counter-UAS

Unmanned Aircraft Systems (UAS) are now a staple of modern warfare, responsible for 60% of targets destroyed in the Ukraine-Russia war....

Generative AI has exploded into the public eye, with the conversation focusing on the opportunities and risks it presents. However, in the Defence sector, there are specific challenges and problems that this technology is simply not suited to solve.

One doesn’t need to look far to find an article or opinion about Generative AI. OpenAI’s public release of ChatGPT in November 2022 and the update to GPT-4 last month introduced the wider public to this groundbreaking new technology. Organisations across all sectors are exploring how this technology might revolutionise how they operate and solve problems that seemed intractable just months ago. There is a strong case to be made that progress in deploying AI in Defence is currently painfully and needlessly slow (a case that I will make another time) and that there should be frustration both internally and with the supplier base. Despite this frustration, Generative AI doesn’t offer the right answers for complex challenges and isn’t a role model for introducing and integrating AI in Defence.

The Rise of Generative AI

Generative AI falls under the broader category of Machine Learning and describes AI systems that learn from ingested data and use this learning to create new content: not just text but also images, music, and video. Large Language Models (LLMs), in this context, are a subcategory of Generative AI: foundation models trained on natural language data that create original content imitating human-written text by repeatedly predicting the next word to use.
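To make "predicting the next word" concrete, here is a minimal sketch of the idea using a toy bigram model. It is a drastic simplification and not how an LLM is actually built: the corpus, the function names (`train_bigram`, `predict_next`, `generate`), and the choice to always pick the most frequent follower are all assumptions made for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

def train_bigram(corpus: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    words = corpus.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

def generate(follows: Dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Build a sequence by feeding each predicted word back in as the next input."""
    out = [start.lower()]
    for _ in range(max_words - 1):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# Hypothetical toy corpus; a real LLM is trained on vastly more text.
CORPUS = (
    "the radar detects the drone . "
    "the radar tracks the drone . "
    "the operator sees the drone ."
)
model = train_bigram(CORPUS)

print(predict_next(model, "the"))        # drone
print(predict_next(model, "submarine"))  # None (word never seen in training)
print(generate(model, "the", max_words=4))
```

The toy model knows nothing about radars or drones; it only knows which word most often followed which. An LLM replaces the frequency table with a neural network that conditions on far more context, but the output is still a prediction of what text is likely to come next.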

Arguably, the best-known example of Generative AI is ChatGPT, built on top of OpenAI's GPT-3.5 and GPT-4 families of LLMs, which can respond to a written prompt from a user with a detailed response drawn from text data from the Internet. A user might type into ChatGPT, “Tell me why innovation in Defence is so slow”, and a credible first answer will appear, describing the problem with a level of intelligence that is startling, especially the first time you do it. You can then ask more questions or tweak the initial response. For example, if the first generation of text was too intellectual, you could say, “Do that again, but in 30 words or less and in a style that even my teenager could understand.” Similarly, you can do this with images: “Make me an oil painting of a whale leaping out of the ocean”.

Demonstration of textual hallucinations prompted by the author. Source: ChatGPT.


The recent developments in Generative AI have led to widespread discussion and debate around the future of the technology, and depending on which end of the hyperbole spectrum you sit on, it is either an overhyped gimmick for generating amusing responses and images or it’s the first step to achieving Artificial General Intelligence (AGI), a technology so potentially world-changing in its power that some are encouraging the media to refer to it as “God-like AI”.

Whatever your thoughts are on Generative AI, there can be no doubt about the speed of its development and the potential for it to change our lives. Nevertheless, this rapid progression gives the wrong impression that this is the exemplar of how AI, in general, can be employed and scaled across every application. It’s this false equivalence that may cause leaders in Defence to judge the pace of development and the scale of deployment of AI and to impulsively decide that Generative AI might be the ideal solution to all their problems.

Defence Data is Different

Much of Generative AI’s power derives from the sheer volume of data on which the underlying models are trained, with estimates indicating that GPT-3 was trained on around 45 terabytes of text data, with the model itself having 175 billion parameters. For its successor, GPT-4, OpenAI has not published a parameter count, and widely circulated figures of as many as 100 trillion remain unconfirmed speculation. When predicting the next word to use in response to a prompt, it helps to have been trained on almost all the text data that the Internet has to offer.
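For a sense of scale, the 175 billion parameter figure can be turned into an approximate storage requirement for the model weights alone. This is back-of-the-envelope arithmetic, not a statement about how any particular model is stored or served: the bytes-per-parameter values are assumed common numeric formats, and the function name `weights_size_gb` is illustrative.

```python
GPT3_PARAMETERS = 175_000_000_000  # parameter count quoted in the text

# Assumption: common numeric storage formats and their size per parameter.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1}

def weights_size_gb(n_params: int, bytes_per_param: int) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 10**9 bytes)."""
    return n_params * bytes_per_param / 1e9

for fmt, nbytes in BYTES_PER_PARAMETER.items():
    print(f"{fmt}: {weights_size_gb(GPT3_PARAMETERS, nbytes):,.0f} GB")
# float32: 700 GB
# float16: 350 GB
# int8: 175 GB
```

Even under the most compact of these assumed formats, the weights alone run to hundreds of gigabytes before any memory is set aside for actually running the model.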

With such an abundance of training data, it’s no surprise that the outputs from Generative AI are so accurate in how they imitate real-world environments and information. But the fact that these outputs are mere imitations is a critical part of the problem with applying Generative AI in Defence. With Generative AI, you're taking observations of the 'real-world landscape' through your data and using these to try to infer what exists in parts of the landscape that you haven't directly observed. So although the outputs of models are driven by real-world data, they are still fundamentally artificial constructs that merely reflect, not represent, the real world. Success in Defence often relies on observing, understanding, and reacting to changes in complex and challenging environments, and to achieve this, you need information whose authenticity and accuracy you can trust.

This is not to say that Generative AI has no place in Defence. For many routine business processes used by the Defence community, Generative AI can be extremely useful. For example, it can support standard contract generation and review, framework legal documentation, summary and synthesis of large text-based data sets and the generation of marketing copy. However, these capabilities do not translate across all use cases in Defence, where the process of turning raw data into actionable intelligence is hosted in myriad technical and physical environments, from a central processing hub in a building to the control centre hosted in a ruggedised large vehicle or contained within the sensor itself at the edge of a battlefield. You might be “fighting with the lights off” with no bandwidth or connectivity, or you might be centrally processing data in a post-mission environment. All these complications require differing and targeted solutions in order to overcome them.

Generative AI as a technology is in its infancy, and what we know about its efficacy in different environments for different applications is very limited at this stage. In any case, there is no one-size-fits-all solution for Defence when it comes to AI. What is needed is a more nuanced approach that starts with the problem and works its way forward with AI solutions that can help achieve operational objectives.

Lack of Transparency

Another area of concern with Generative AI is the data used to train certain models: whether this information is made available to the public, and whether the models are transparent enough for you to understand how they make decisions and generate outputs. There is a huge amount of secrecy around the data that companies like OpenAI use to train their models, as the few major tech companies with the necessary capital and computing power to develop Generative AI compete to be at the forefront of the next big breakthrough.

When these models generate their outputs, there’s no explainability or provenance; you have no way of knowing what data the model was trained on or how it arrived at a particular output from that data. If you’re using Generative AI recreationally to create an original image or piece of music, then model transparency might not be that important to you because your goal is just to create something unique and original. But in Defence, decisions based on the outputs of AI models can have a significant impact on people’s lives, which means we have to hold these models to the highest possible standard of explainability. This is something that just isn’t currently possible with Generative AI. To what degree transparency and explainability will be sacrificed by the big players to maintain a commercial edge remains to be seen.

True? Or Truthy?

One of the most problematic complications with Generative AI is the “hallucination” issue. Contrary to popular belief, Generative AI hallucinations are not only the ones where the output of the model is incoherent or inconsistent with the real world… Every result of a generative model is a hallucination. Sometimes the hallucination is better than others, and the words it creates are factually accurate. Other times, it’s worse, like when it confidently writes a synopsis of a Dostoyevsky novel that never existed. But whether the output actually lines up with the world or not, make no mistake, it’s hallucinating.
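The point that accurate and inaccurate outputs come from the same process can be illustrated with a deliberately tiny generative model. The sketch below is an assumption-laden toy, not a description of any real LLM: it learns only which word may follow which from two true sentences, then enumerates everything it is able to generate. The training sentences and the names `train` and `all_outputs` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set

# Hypothetical training data: both sentences are true.
TRAINING_SENTENCES = [
    "dostoyevsky wrote crime and punishment",
    "tolstoy wrote war and peace",
]
END = "<end>"  # marker for "the sentence stops here"

def train(sentences: List[str]) -> Dict[str, Set[str]]:
    """Record which words were observed to follow each word."""
    nxt: Dict[str, Set[str]] = defaultdict(set)
    for sentence in sentences:
        words = sentence.split() + [END]
        for a, b in zip(words, words[1:]):
            nxt[a].add(b)
    return nxt

def all_outputs(nxt: Dict[str, Set[str]], start: str, max_len: int = 10) -> List[str]:
    """Enumerate every sentence the model can generate from `start`."""
    results: List[str] = []

    def walk(path: List[str]) -> None:
        last = path[-1]
        if last == END:
            results.append(" ".join(path[:-1]))
            return
        if len(path) >= max_len:  # guard against loops in larger corpora
            return
        for word in sorted(nxt.get(last, ())):
            walk(path + [word])

    walk([start])
    return results

model = train(TRAINING_SENTENCES)
outputs = all_outputs(model, "dostoyevsky")
never_seen = [o for o in outputs if o not in TRAINING_SENTENCES]

for sentence in outputs:
    print(sentence)
print(len(outputs), "possible outputs,", len(never_seen), "never seen in training")
```

From two true sentences, the model can produce four equally fluent ones, including "dostoyevsky wrote war and peace". Nothing in the generation step distinguishes the one output that happens to match the training data from the others; correctness is a coincidence of the statistics, not something the model checks.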

Generative AI can create original content in a variety of forms with incredible levels of detail, but fundamentally it is neither concerned with nor capable of understanding truth or reality. These are ideas that simply exist outside of its parameters and its capabilities. It merely attempts to generate outputs that closely represent the prompts and commands it is given. In Defence, the quality and veracity of the information that informs your decision-making are of paramount importance, meaning you can’t have doubts about whether your model’s outputs are consistent with reality.

Generative AI visual hallucination of a whale jumping out of the ocean. Source: Midjourney.

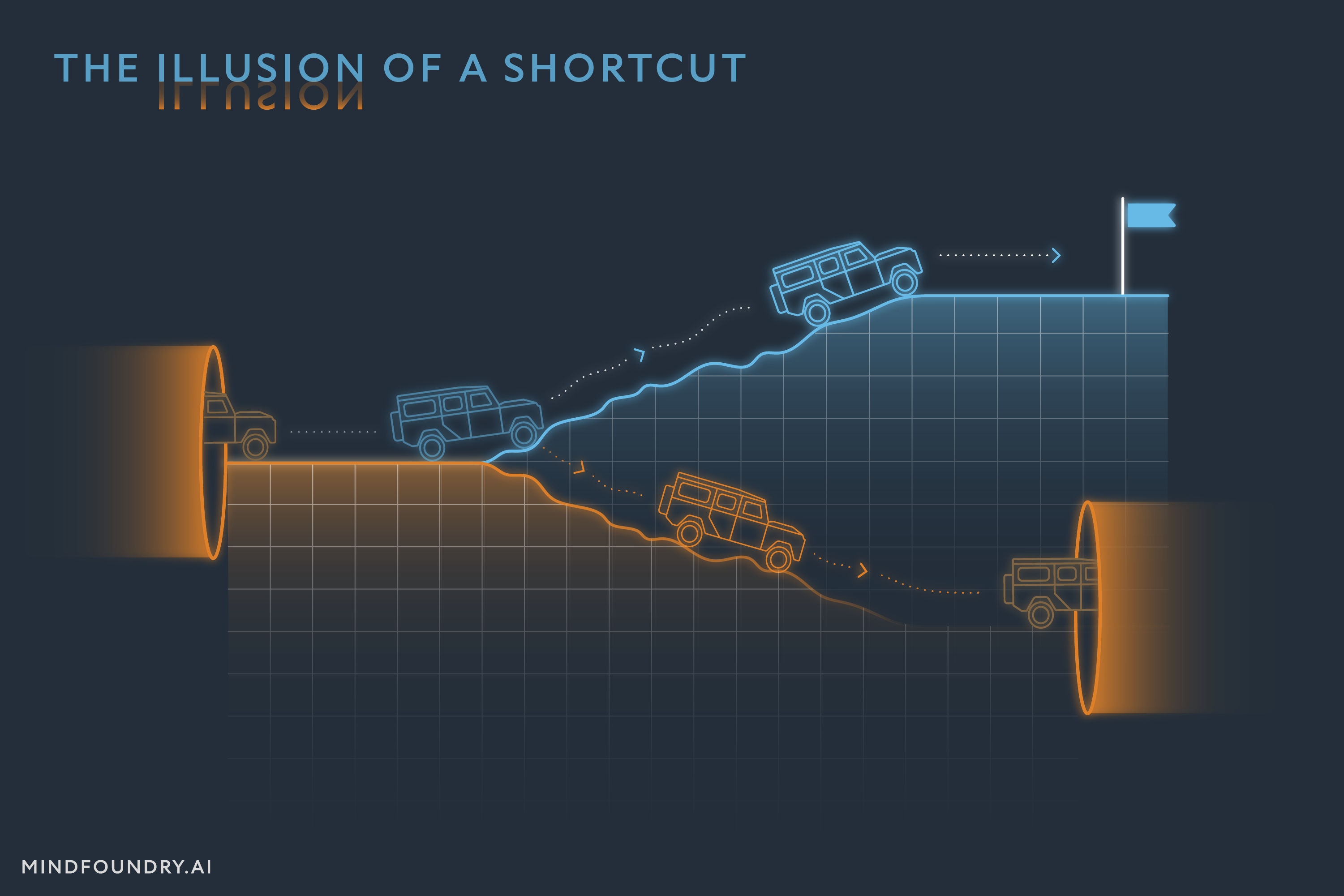

Generative AI has its place, but not at the expense of Responsible AI

There is no question that Generative AI is a revolutionary technology that has an important role to play in all fields. But it is simply not a silver bullet. The unique requirements of high-stakes applications, especially those we find in Defence, mean that they must be approached with the same care, or greater, that you would use with critical systems like autopilots or fire control. Though Generative AI is clearly not the universal shortcut that many hope it is, it may one day play an important role in complex multi-agent systems that understand and account for the risks it brings with it. No matter how the technology and our relationship with it evolve, progress should never come at the expense of responsibility.

Enjoyed this blog? Check out 'AI for Defence: More than just an innovation opportunity'.
