AI for Sensor Fusion: Sensing the Invisible

In Defence and National Security, mission-critical data often emerges from a multitude of different sensor types. With AI, we can bring this...

AI is everywhere. It has rapidly become an integral part of how we organise our lives, go about our jobs, and get from place to place. And yet, in our discussions with customers, we often encounter a wide range of misconceptions about what AI actually is. In this article, we aim to shed some light on what this technology is and how it works, but also on where AI began, who the pioneers were that paved the way, and where all of this is heading.

We spoke to Mind Foundry’s co-founder Stephen Roberts, who, as a professor of Machine Learning at the University of Oxford, has been at the forefront of AI research and innovation for over 30 years.

Where did AI begin?

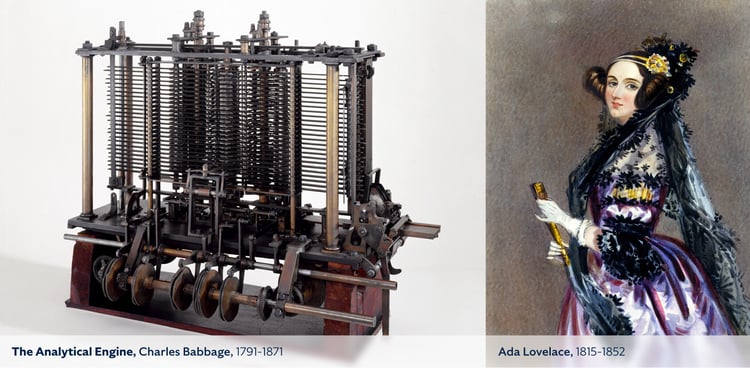

Professor Roberts: In my mind, AI had its beginnings many centuries ago. Some of the first people to talk about AI in a modern guise lived in the 19th century, including luminaries such as Ada Lovelace and Charles Babbage. Lovelace and Babbage had aspirations to understand the mathematical equations that govern consciousness, that is, how we, as human beings, achieve conscious action and agency. Their work led directly through to the 20th century and much of what we do today. They were the predecessors of legends such as Alan Turing. So, although AI itself and the study of the mathematics and computation of conscious decision-making and action have a very long history, it's only in the past few decades that we have become truly aware of what such mathematics and computer science could do for the world.

Why did AI begin?

Professor Roberts: It’s worth seeing AI as part of a wider revolution in the automation of human reasoning, hand-in-hand with the philosophy of automation and our role as human beings alongside it. It speaks to the philosophy of people like John Maynard Keynes and others who strove to understand how we, as humans, can work with automation to get the best out of each other.

This goes right the way back to the machine age and the Industrial Revolution of the 18th and 19th centuries, to the aforementioned computer visionaries like Ada Lovelace and Charles Babbage, and to the people who worked on economic theory to understand how automation was going to affect society and economics. People like Keynes aimed to understand how automation could potentially displace workers, an idea that's as important today as it was in the 19th century.

Then we have the classic era of symbolic solutions and the digital repetition revolution that took place from the fifties onwards. That is something that has really spearheaded the modern AI revolution, and from the 1970s onwards, we have seen an exponential rise in automation and intelligent algorithms.

What are the key moments in the history of AI?

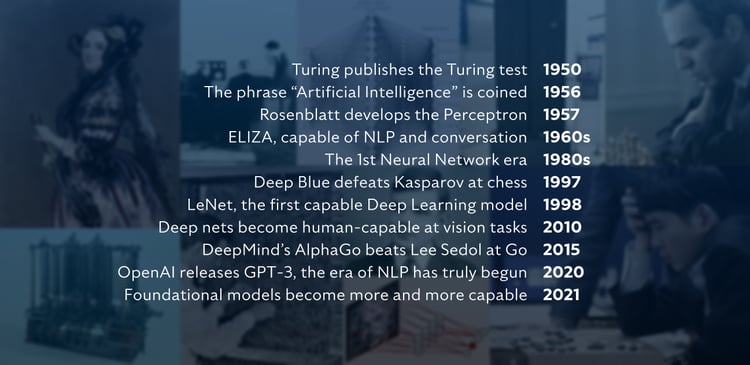

Professor Roberts: To my mind, AI starts with Alan Turing publishing the Turing Test. The question Turing was essentially asking was, what does it mean to be human and intelligent?

The phrase “Artificial Intelligence” was actually coined in the mid-1950s. It went hand-in-hand with pioneers like Rosenblatt, who created the single-layer Perceptron, one of the first algorithms that ran on an all-purpose digital computer and would try to learn from examples. This was followed in the 1960s by the first precursors of natural language processing, which were conversational programs like ELIZA. The very first neural network era began in the 1980s, and the following decade brought impressive developments such as Deep Blue, IBM's chess computer, which amazingly beat Garry Kasparov, the then world champion, at chess.

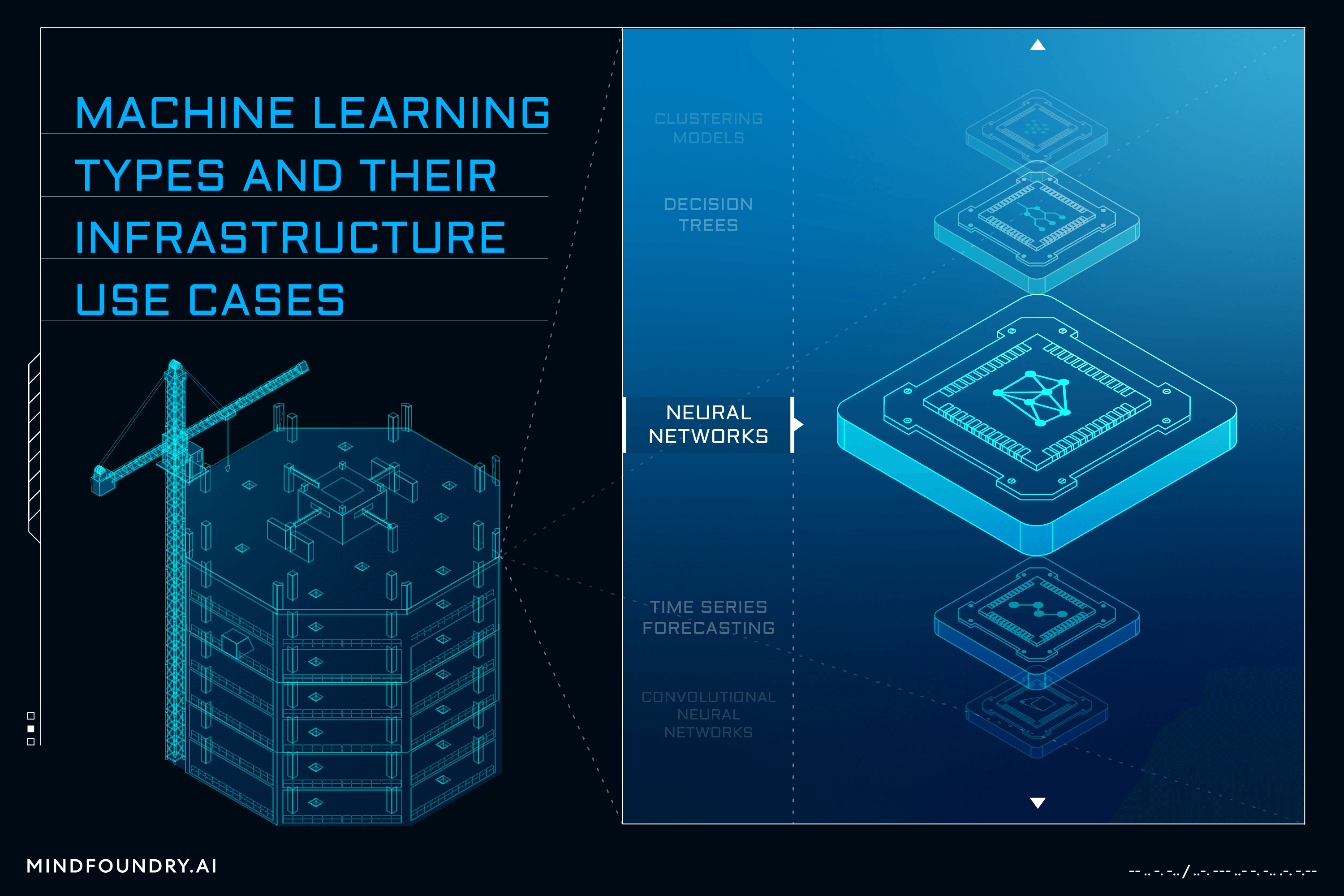

In the late 1980s, we started to see the impressive behaviour of things like convolutional neural networks, pioneered by the likes of Yann LeCun, and the way in which they could achieve human-like performance in image processing. Then we have pioneering landmarks such as DeepMind's Go algorithms, which very famously beat the then-champion, Lee Sedol, at Go. This led directly to incredibly impressive advances from not only the academic world but also, importantly, the commercial world, which was able to scale these amazing phenomena and algorithms.

What can’t AI do?

Professor Roberts: At Mind Foundry, and certainly as academics, we know very clearly what AI is not. It's worth saying that AI is not a cure or a magic formula that solves every problem that we see out there in the world today. We can't just throw AI at our problems and hope that it will immediately solve absolutely everything. For all of us in Mind Foundry, it is maths, not magic. It is based on principles of science, not on the hope that scale alone will solve a problem.

How does AI work?

Professor Roberts: Essentially, in order to understand AI’s concepts from a mathematical and computational perspective, we just need a computer. A computer is a logic engine, and one of the world's first real computers was Babbage’s Analytical Engine, which was designed in the first half of the 19th century, although it was never completed. This was a mechanical computation engine, but its design has all the components of a modern digital computer, including the fact that it is a general-purpose computational engine that can be programmed. This means that we also need somebody who is going to do the programming. The same is true today of AI, where human input is still an influential and integral part of the process.

How is AI different from classical programming?

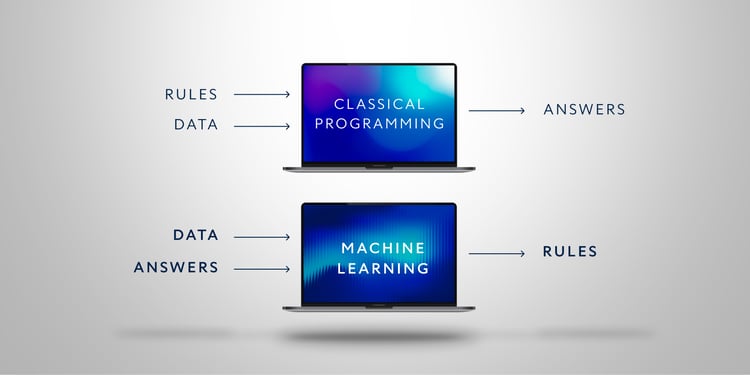

Professor Roberts: With classical algorithms, we program the rules directly into the solution in order to get answers. What makes AI different is that rather than writing an algorithm that contains the rules, we write an algorithm that allows the computer itself to learn the rules from data. This is what we mean by machine learning. What the algorithm is really doing is learning a relationship between what it observes and what the ideal answer should look like. We can use this training or learning process to define a strategy, a set of rules, or simply a response to a given observation.
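To make the contrast concrete, here is a minimal Python sketch. It is an editorial illustration rather than anything from the interview: the temperature task, the 30-degree threshold, the example data, and the names `handwritten_rule` and `learn_rule` are all assumptions chosen for simplicity. The first function hard-codes a rule; the second learns one by choosing the threshold that best matches a set of labelled examples.

```python
from typing import Callable, List, Tuple

# One labelled example: (observation, ideal answer). Hypothetical task:
# decide whether a temperature reading in Celsius counts as "warm".
Example = Tuple[float, bool]


def handwritten_rule(temperature_c: float) -> bool:
    """Classical programming: a human fixes the rule in advance."""
    return temperature_c > 30.0  # threshold picked by the programmer (assumed)


def learn_rule(examples: List[Example]) -> Callable[[float], bool]:
    """Machine learning: choose the threshold that best fits the examples.

    Assumes at least two examples with distinct observations.
    """
    values = sorted(x for x, _ in examples)
    # Candidate thresholds: midpoints between neighbouring observations.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold: float) -> int:
        # Count examples where the prediction disagrees with the label.
        return sum((x > threshold) != label for x, label in examples)

    best = min(candidates, key=errors)
    return lambda x: x > best


training_data: List[Example] = [
    (12.0, False), (18.0, False), (21.0, False),
    (26.0, True), (29.0, True), (35.0, True),
]

learned_rule = learn_rule(training_data)
print("learned:", learned_rule(24.0), "handwritten:", handwritten_rule(24.0))
```

With this data, the learned rule places its threshold between 21 and 26 degrees, so it disagrees with the hand-written rule on a reading of 24. Neither is "right" in the abstract; the point is only that the learned threshold came from the examples rather than from the programmer.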

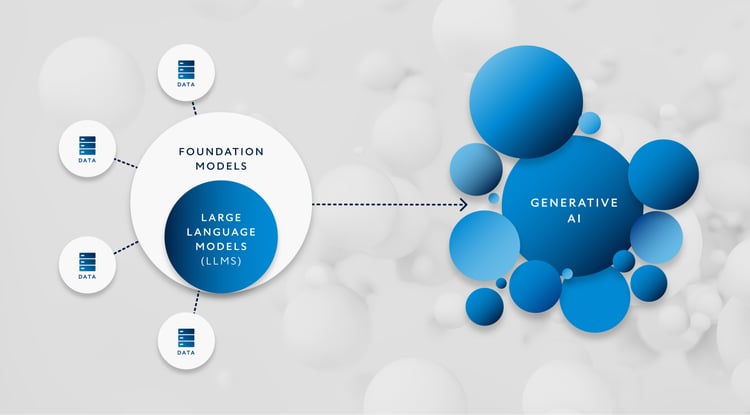

Huge leaps forward in designing algorithms that learn from very large sets of data examples are why, in the past few years, we have seen OpenAI coming into the commercial mix alongside Amazon, Facebook, and Google, releasing large language models such as GPT-4. These kinds of models have caused enormous excitement across the world because of their ability to generate human-like responses in natural language.

This brings me back to Turing and the 1950s, and to the question: what does an algorithm look like that can mimic a human being in a task? That doesn’t mean it is human, and it doesn't mean it's necessarily conscious in any way, but it does mean that we've found a recipe for creating algorithms that can learn to produce solutions that can fool human beings, with answers so human-like that we feel compelled to ascribe human-like agency to the algorithm. I'd argue generative and foundation models, like GPT-4, are able to do exactly that.

What’s next for AI?

Professor Roberts: The evolution of AI technology is phenomenally complex. Whereas the AI of the 20th century was relatively limited, now we are seeing a swathe of new, potentially revolutionary technologies emerging. Amongst these approaches are things like uncertainty-aware AI, metalearning, ensembles of models, large language models, generative models, human-centric AI, trustworthy and responsible AI, and transparent, interpretable AI. In terms of the hardware we run these models on, we are yet to see the final role that quantum computation is going to play. At Mind Foundry, we have the privilege of playing a part in this very long journey that's been hundreds of years in the making, and not just to play our small part but, through our liaison with academic partners, to try to make sure that this small part has a really positive impact on the world of technology.

Enjoyed this article? Check out Stephen Roberts’ full webinar on ‘Explaining the Origins of AI’.
