Insuring Against AI Risk: An interview with Mike Osborne

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...


Mind Foundry: Aug 2, 2021 3:00:00 PM

I would be surprised to find anyone working in the tech sector, especially with data, who hasn’t seen a significant emphasis on ethical applications of AI, or “doing AI ethically” (even the Pope has got involved!). Conferences, research, blog posts, videos and thought-starters are all, quite rightly, homing in on arguably one of the most important questions of the 21st century: how do we build AI to the benefit of humankind?

For some of those aspiring to answer this question, that means decades’ worth of research. For others, it means millions of hours of person-time spent on algorithmic design or software troubleshooting. The responsibility for getting this right extends further still, to policy, regulation, education, investment… the list goes on.

But the list isn’t the only thing that goes on. As I write this, thousands, perhaps millions, of companies around the world are grappling with AI adoption. They don’t have decades or even years to play with… they need it now. As I mentioned in a previous blog post, there’s a race on to get the most out of AI adoption before it’s too late. In the UK alone there are currently over 1,400 high-growth AI startups and scale-ups, and that doesn’t even count the vast swathes of commercial and public sector adopters outside the AI industry. This is a real challenge.

So how can you adopt AI ethically? Or responsibly? Is that even technically possible right now? Let me answer by addressing some of the most common questions we’re asked at Mind Foundry.

Where do I start?

Congratulations, you already have! Becoming aware of the issue, and understanding that there is a whole host of considerations beyond accuracy that you need to pay attention to, is the first step (you can read here about some of the top ethical considerations for AI in the public sector).

It also depends on who you are: are you building your own AI systems, are you the user of one, or are you responsible for purchasing an appropriate AI solution? Perhaps you’re all three. One of the most common misconceptions about ethical AI today is that it is the sole responsibility of the people creating the systems. That’s not at all the case. An AI solution deployed in one company might meet all of its ethical targets, but the same system deployed in a different organisation might not. Thinking of your use of AI as a combination of your technology, your people, your purpose, your data and your intended beneficiaries will help you decide whether or not a particular solution or system is right for you.

How do I know if the AI I’m producing or using is ethical?

There is never going to be a guarantee, which is why companies that take this seriously are starting to invest in internal processes and ethical checkpoints. We are often asked whether you can build AI that is “regulatorily compliant”. The answer is “of course” (and you should!). But take care: regulation in the AI space is still in its infancy, and complying with the law does not cover all of the major ethical considerations.

If my AI is ethical today, will it be ethical tomorrow?

Yet again, not necessarily. AI is a complex product of both data and algorithms, and in applications where the AI’s decisions directly affect humans, your ethical assessment needs to account for how the data and models change over time.

For example, suppose a model is designed to serve a particular group, say electric vehicle (EV) owners. You will tailor some of your design decisions to the population you intend to serve. Say, for argument’s sake, that the average EV owner is white, male and in his fifties: your model will then be designed to serve this population very effectively. However, we know the demographics of EV owners are likely to change over the next few years, driven by the government’s Net Zero targets and steadily improving EV technology. Your model does not know that. So even if you design the best model for your current situation, and take all the ethical considerations into account today, it could unintentionally disadvantage your intended population in the future because the world around it has changed. A key part of your commitment to ethical AI must be a commitment to its future purpose as well.
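To make the EV example a little more concrete, here is a minimal sketch of one way a team might notice that the population has shifted away from the one a model was built for. It uses the population stability index (PSI), a common drift measure chosen here purely for illustration; it is not a method prescribed in this post. The age bands, both sets of proportions and the 0.25 alert threshold are all hypothetical.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two categorical distributions given as {category: share}.

    Each term is (actual - expected) * ln(actual / expected); shares are
    floored at eps so a category missing from one side doesn't break the log.
    """
    categories = set(expected) | set(actual)
    psi = 0.0
    for category in categories:
        e = max(expected.get(category, 0.0), eps)
        a = max(actual.get(category, 0.0), eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical age-band shares of EV owners: when the model was built vs. today.
training_population = {"18-34": 0.10, "35-49": 0.25, "50-64": 0.50, "65+": 0.15}
current_population = {"18-34": 0.25, "35-49": 0.35, "50-64": 0.30, "65+": 0.10}

psi = population_stability_index(training_population, current_population)

# Hypothetical threshold: 0.25 is a frequently quoted rule of thumb for a
# significant shift, not a regulatory or universal standard.
if psi > 0.25:
    print(f"PSI={psi:.3f}: the population has shifted; review the model")
else:
    print(f"PSI={psi:.3f}: no major shift detected")
```

The check itself is simple; the point is that the model will never raise this alarm on its own, so someone has to own the job of comparing who the model was designed for with who it is serving today.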

What support is there to help me?

Thankfully, quite a bit! “Ethical consultancies” are popping up in the UK to guide you through some of the important considerations. Globally, there are independent centres (like RAI) that offer guidance and information on approaching this from a practical perspective. A good AI solution provider will talk to you upfront about how to address the ethical elements of your solution design, as well as the technicalities. We at Mind Foundry offer an Ethical AI Project Design service that can help you take some of those first steps.

Embedding ethical design within global applications of AI is going to be one of the most challenging demands of the 21st century, yet one of the most important. As regulation evolves and machine capabilities improve, it’s the humans in the driving seat of usage, research, implementation and design who will guide our collective capabilities towards a truly human-centric AI.

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...

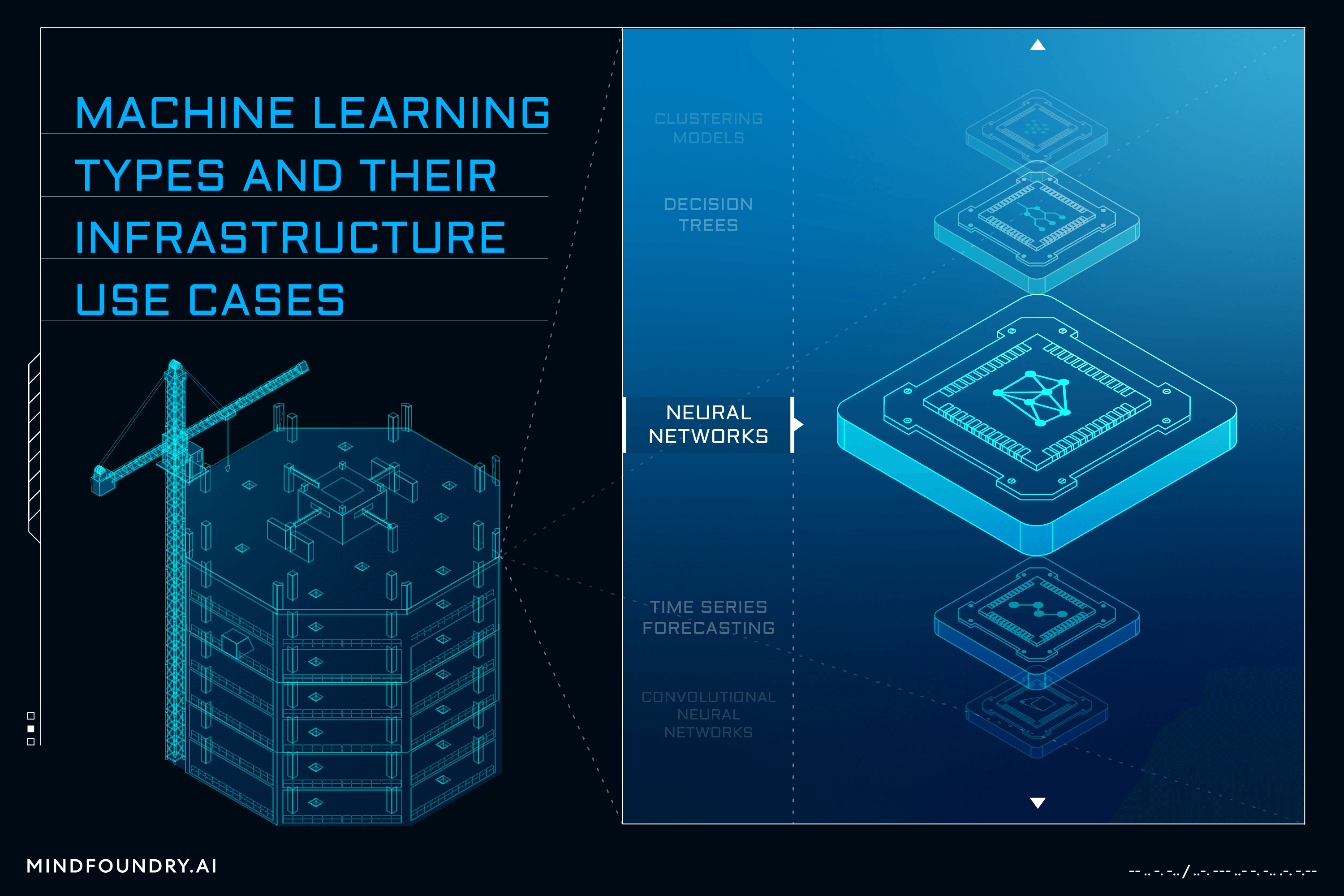

AI and Machine learning is a complex field with numerous models and varied techniques. Understanding these different types and the problems that each...

While the technical aspects of an AI system are important in Defence and National Security, understanding and addressing AI business considerations...