Insuring Against AI Risk: An interview with Mike Osborne

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...

The term “Human-in-the-loop” is often invoked as an effective countermeasure to concerns surrounding the unfettered use of AI. However, as AI becomes more ubiquitous in society and more integral to high-stakes applications in Defence & National Security, it’s important that we understand why the term has been used, what its limitations are, and why it may be time to consider a new approach to de-risking AI’s use in the most important use cases.

Human vs Machine Intelligence

There is a fundamental difference between human and machine intelligence. Even the most recent advances in AI, enabled by access to huge labelled datasets and computing power, don’t get close to the sophistication of the human brain. Improvements in algorithmic efficiency and the exponential growth in available processing and training compute will probably drive these advances forward for a while longer. Eventually, though, the supply of training data will run out, and there is a risk that a feedback loop, in which AI is trained on AI-generated data, will push us further away from, rather than closer to, the exponential improvements in model performance that we strive for.

Despite the efforts and claims of the major AI players, machines are highly unlikely ever to feel empathy towards humans or other machines, and certainly not in a sense that humans would recognise. Their understanding of the context in which they operate, and of the second- and third-order effects and implications of their decisions or predictions, probably won’t develop along the same lines as that of the human brain. In some use cases this isn’t an issue, but in high-stakes applications like defence, contextual understanding and empathetic foresight are necessary to make decisions that create good outcomes.

Seeing through the Fog of War

War is the ultimate high-stakes human endeavour, and it rarely unfolds in a linear or predictable manner. The number of interdependent and variable components in the competitive and congested battlespace creates uncertainty of outcome at both the macro and micro level, and it is this uncertainty that makes deploying impactful AI systems in this environment so challenging. When faced with uncertainty, human qualities like empathy, domain experience, and instinct can be the difference-makers.

The ability to apply emotional intelligence to a situation is a uniquely human characteristic; we are the only ones who can generalise well beyond the data we are presented with and understand the wider context. There is a reason that one of the most enduring texts on the principles by which war should be waged is called “The Art of War” and is thousands of years old. The unpredictable nature of warfare remains constant; it is merely the character of war that changes. Carl von Clausewitz, a more recent and oft-quoted philosopher on the nature of war, suggested that war could not be quantified and that leaders needed to rely on their ability to make decisions amidst chaos.

We may be suffering from recency bias with regard to the impact of technology on war. Since the dawn of humankind, the ways people wage war have undergone numerous evolutions, but revolutions have been few and far between. The invention of gunpowder was arguably the first true revolution in warfare because it eventually made knights, and with them feudalism, obsolete. Nuclear weapons, the second revolution, were meant to provide a form of global stability through mutually assured destruction. Technology and automation are being touted as the third revolution, but the enduring participant through all of these revolutions is the human, and we should take care to fit the technology to humans, not the other way around.

Solving a Complex Problem Requires Contextual Understanding

Machines and machine learning are brilliant at sifting vast quantities of data of different types, identifying patterns, and making predictions. What they lack is the ability to provide context or emotion. The phrases “human-in-the-loop” and “human-on-the-loop” were originally formulated as technical engineering terms describing the stages at which humans are involved in setting up AI systems and then tuning and testing the models. In that original sense, the terms imply little actual human involvement in the loop: the AI makes a decision or prediction, and the human then uses it to inform their own decision, with interaction that is superficial at best.

However, their meaning has slowly changed over the years as they have entered common parlance. Now, the phrases create the often-illusory impression that humans have ultimate control over the AI system and can act as a check and balance on its performance and outputs. This sense of human control and pre-eminence is especially important in high-stakes applications like Defence and National Security, where human involvement is supposed to guard against the flaws in AI, acting as an antidote to hallucination or some inexplicable prediction.

The relationship between humans and machines lies at the heart of the challenge. While it’s widely accepted that we want to combine the best of human and machine capabilities, the nuances of human ability often get lost in the discussion. Not everyone is destined to be a research scientist or a Prime Minister, and we have established ways, albeit imperfect ones, of matching people to roles that suit their strengths. Similarly, while AI is powered by complex machines, we often reduce it to a single, overly simplistic idea, stripping away the inherent uncertainties. We frequently leap from ‘AI could do this’ to ‘AI will do this’ in a way reminiscent of Descartes’ leap from ‘I think’ to ‘I am,’ creating an unwarranted certainty about AI’s present and future role.

Inadvertently, this has created the impression that humans are a regulating or controlling element whose purpose is to support the optimisation of machine performance. AI-in-the-loop offers a different view: using machines to optimise human performance. Instead of inserting humans into machine workflows, insert machines into human ones. Instead of using humans to solve machine problems, use machines to solve human ones. It reframes the challenge from a machine problem into a human one.

As Mind Foundry co-founder Professor Stephen Roberts highlights, “Many high-stakes application areas require people to be the responsible agents, not AI. So if we're going to work in these areas where people have the agency and the overall decision-making capability, we need to help augment them using AI to accelerate and give them extra capacity, especially in overwhelming data scenarios. That means that AI, rather than being the dominant factor in a loop of observation to action-taking, is actually relegated to being a support structure to help the human chain of command. That is AI in the loop, and that absolutely has its place in many high-stakes areas.”

If one takes the OODA (Observe, Orient, Decide, and Act) loop approach to decision-making, machine learning processes could be inserted into each stage in several different ways, all working for the human. How they are inserted should be determined by the parameters of the human/machine interaction at each stage. The speed of decision-making might improve as more useful insight is extracted and funnelled to the right places faster, but that could recreate the same information-overload problem, putting us back at square one. The understanding of risk might improve, but the appetite for taking risks will remain a human judgment.
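To make the AI-in-the-loop idea a little more concrete, here is a minimal Python sketch, assuming a simplified OODA cycle in which machine components assist the Observe and Orient stages while the Decide stage belongs to a person. Everything in it is hypothetical and purely illustrative: `ai_observe`, `ai_orient`, `make_commander`, the keyword filter and the fixed risk scores are placeholders, not Mind Foundry's method or any real system. What it tries to show is the shape of the loop: the machine estimates risk, but the risk appetite is an input supplied by the human.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Option:
    description: str
    estimated_risk: float  # hypothetical machine-estimated risk in [0, 1]


@dataclass
class CycleRecord:
    observations: List[str]
    options: List[Option]
    chosen: Option
    outcome: str


def ai_observe(raw_feeds: List[str]) -> List[str]:
    """Observe: the machine sifts raw feeds and keeps items it flags as relevant.
    The keyword filter is a toy stand-in for a real detection model."""
    return [item for item in raw_feeds if "contact" in item]


def ai_orient(observations: List[str]) -> List[Option]:
    """Orient: the machine turns observations into candidate options,
    sorted from lowest to highest estimated risk.
    The fixed risk values are placeholders, not a real risk model."""
    options = [Option(f"investigate {obs}", 0.3) for obs in observations]
    options.append(Option("hold and monitor", 0.1))
    return sorted(options, key=lambda o: o.estimated_risk)


def make_commander(risk_appetite: float) -> Callable[[List[Option]], Option]:
    """Stand-in for the human decision-maker: the AI estimates risk,
    but the appetite for risk is set by the person, not the machine."""
    def decide(options: List[Option]) -> Option:
        acceptable = [o for o in options if o.estimated_risk <= risk_appetite]
        # Options arrive sorted by risk, so acceptable[-1] is the boldest
        # option within appetite; otherwise fall back to the safest one.
        return acceptable[-1] if acceptable else options[0]
    return decide


def run_cycle(
    raw_feeds: List[str],
    human_decide: Callable[[List[Option]], Option],
    human_act: Callable[[Option], str],
    history: List[CycleRecord],
) -> Option:
    """One pass through a human-owned OODA loop, with AI supporting two stages."""
    observations = ai_observe(raw_feeds)  # Observe: AI assists
    options = ai_orient(observations)     # Orient: AI assists
    chosen = human_decide(options)        # Decide: the human is the responsible agent
    outcome = human_act(chosen)           # Act: carried out under human command
    # Keep a record so outcomes can be reviewed to improve future cycles.
    history.append(CycleRecord(observations, options, chosen, outcome))
    return chosen


# Usage with made-up feed items.
history: List[CycleRecord] = []
feeds = ["contact bearing 040", "weather update", "contact bearing 310"]
cautious = make_commander(risk_appetite=0.2)
choice = run_cycle(feeds, cautious, lambda o: f"executed: {o.description}", history)
print(choice.description)  # hold and monitor
```

Swapping in a commander with a higher `risk_appetite` changes the chosen option without touching any of the machine components, which is the sense in which the loop stays human and the AI only augments it.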

Clear Decision-Making in a Murky World

We live in an imperfect, unstructured and chaotic world, a world in which the human brain has evolved to interpret and contextualise. Machines can scan huge, multi-spectral data sets continuously and then present patterns or risks. They can then provide options for decision-makers, no matter where the battle is taking place, be it land, air, sea, space or cyber. Once the decision is made and action is taken, the machines can review outcomes, providing rapid feedback loops to improve performance for future actions. But all of these wonderful potential applications of AI must be geared towards optimising human decision-making. The loop is human. Let’s augment and extend it with AI.

Enjoyed this blog? Read our piece on the Human vs AI Trust Paradox.

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...

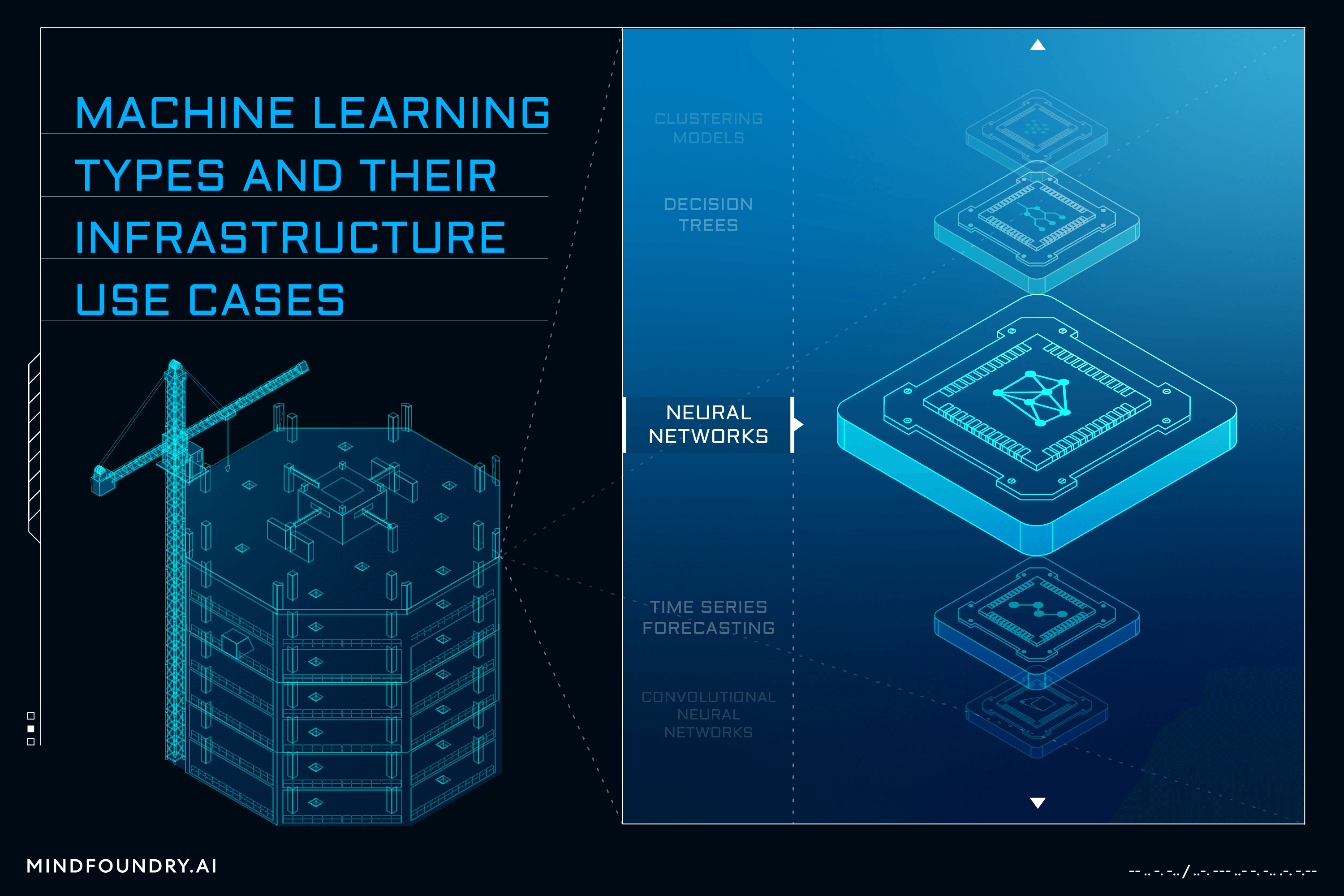

AI and Machine learning is a complex field with numerous models and varied techniques. Understanding these different types and the problems that each...

While the technical aspects of an AI system are important in Defence and National Security, understanding and addressing AI business considerations...