Insuring Against AI Risk: An interview with Mike Osborne

When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...

In defence and national security, the nature of problems and the environments in which they occur make operationalising AI hugely challenging. This is especially true of the maritime domain, where threats are becoming more prolific and advanced. Although sensor technology and computing capabilities have improved and fallen in cost, the resulting explosion in data volumes brings a new processing challenge that is saturating operators’ cognitive bandwidth. AI has the potential to be a game-changer in helping overcome this issue, but it must navigate obstacles in three key areas for this to become a reality.

In April of 2024, Mind Foundry’s Director of Intelligence Architecture, Alistair Garfoot, delivered a paper and presentation at the Undersea Defence Technology conference in London examining the challenges of operationalising AI in Defence and opportunities to accelerate its impact. We’ve summarised the key themes from his presentation and made them accessible in the piece below.

AI is Not an Innovation Opportunity

A common mistake made by those pursuing AI capability is to begin with an innovation mindset. Given the relative nascence of some techniques, AI is often viewed as unproven technology, resulting in a drive to concentrate risk in the technology itself and minimise operational risk by exploring use cases which are not operationally critical. The goal becomes to test AI’s potential rather than to achieve operational benefit, with effort focused only on problems where project failure has little impact. The flip side is that even successful projects lack sufficient buy-in, budget, and support to carry them through to production, as the problems they address aren’t important enough to drive this outcome. As detailed by Gartner, 46% of AI projects never make it to operations, and from our experience in defence and national security, we strongly suspect this number is higher.

This focus on technology over operational impact also leads to various other difficulties. Sometimes, focusing on “using AI” results in other technologies being “taken off the table” unnecessarily in solution design, as the priority is to demonstrate AI use rather than actual operational benefit. In many successful use cases, AI capability is actually only a small part of a larger processing pipeline that includes other, more traditional techniques. Ultimately, the key to operational success is to take a problem-first approach, using AI only where it is needed, rather than a technology-first approach that aims to use AI in as many places as possible.

Finally, the focus on technology can reduce access to users and their feedback, as interaction may be withheld until technical proofs of concept are completed. Proceeding without this critical information leads to systems optimising for arbitrary data-scientific metrics, such as accuracy or precision, which make little sense to real users. This creates a significant barrier to conveying system relevance and potential value, hampering the chances of further development and adoption. In complex settings like maritime defence, there is a pressing need for systems designed specifically to solve the problems of their operational users. A user-centric approach is essential to ensure that the right questions are being asked and that these questions are asked and validated continuously as system complexity grows.
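To make the point about metrics concrete, here is a minimal Python sketch. Every number in it is invented for illustration: we assume 1,000 hypothetical sonar contacts, 10 of which are genuine threats, and a "model" that never raises an alert. It is not drawn from any real system, only a way of showing how a headline accuracy figure can look excellent while meaning very little to an operator.

```python
# Hypothetical illustration: all figures below are invented for this sketch.
# 1,000 sonar contacts, of which 10 are genuine threats (1) and 990 are benign (0).
labels = [1] * 10 + [0] * 990

# A "model" that never raises an alert, whatever it is shown.
predictions = [0] * len(labels)

# Accuracy: the share of contacts classified correctly.
correct = sum(p == y for p, y in zip(predictions, labels))
accuracy = correct / len(labels)

# What an operator actually cares about: how many threats were caught or missed.
threats_found = sum(p == 1 and y == 1 for p, y in zip(predictions, labels))
threats_missed = sum(p == 0 and y == 1 for p, y in zip(predictions, labels))

print(f"accuracy: {accuracy:.1%}")
print(f"threats found: {threats_found}, threats missed: {threats_missed}")
```

In this invented scenario the accuracy figure is high even though every threat is missed, which is why the metric needs to be agreed with users rather than chosen in isolation.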

The Realities of Working in Defence

While the defence industry’s understanding and treatment of AI as an innovation opportunity demonstrates room for improvement, to work effectively in defence, AI providers must also adjust their behaviours. It’s not uncommon for providers to have unrealistic expectations, such as expecting customers to share large, labelled datasets, which is a challenge in sensitive environments where the historical focus has not been on data architecture. This reality needs to be accepted rather than railed against. Providers must often work with data owners and customers in defence and national security to navigate these issues and gradually improve processes and understanding. Using the issues as an excuse for lack of development is naive and unhelpful. It is, in fact, possible to make progress in these challenging contexts using alternative architectures or adjacent problems, and AI providers must be aware of and prepared for this.

Problems are not limited to the technology space, with issues also surfacing commercially. The complexity of both AI technology and the most challenging problems in defence means that it takes significant time to build capability and reach a maturity level where an operational contract is a realistic prospect. Underestimating this (somewhat irreducible) timeline can represent a significant risk for early-stage AI providers. Building a business solely in defence represents an enormous financial challenge. We’ve seen several companies fall foul of this in the UK, with results including the winding down of operations, redundancies, and selling off business units. It’s important to walk into the defence sector with eyes open and the potential to compensate for long timelines to production revenue with additional lines of business where technology can be developed in tandem. The inescapable reality is that building repeatable products is hard and takes time, as does reaching operational deployment in defence.

Procurement and Process Challenges

Defence and AI are a somewhat uncomfortable partnership. The “move fast and break things” risk-seeking ethos of Silicon Valley startups does not naturally mesh with the deliberate and sometimes risk-averse attitudes of defence and national security. This naturally results in friction when blending development and procurement processes between the two sectors.

By way of example, the Technology Readiness Levels (TRL) maturity scale works well for hardware systems, where iteration and system testing in different environments demand significant cost and effort. AI development is not the same. Moving away from the hardware domain makes it easier to iterate quickly and rapidly improve and test capability. In some scenarios, new AI systems are conceived, built, tested, deployed, and initially validated in a deployed environment in under a month. Indeed, the complexity of many AI use cases necessitates early-stage real-world testing to avoid generating increasingly complex simulation and testing environments. Acknowledging these differences on both sides and compromising on process and timeframes is important in accelerating results.

There are also challenges around ongoing AI assurance, updates, and management. The exact shape, ownership, and process needed to achieve production impact are not completely understood, which generates many questions at early stages as stakeholders attempt to forecast later requirements. Generalised approaches to defining what this will look like are likely to be vague at best and actively harmful to AI deployment at worst. How the operational use of AI will be managed and enabled, and by whom, is still being explored, and providers must acknowledge where there are gaps in their understanding. These questions can and will be answered, but through experience and experimentation, rather than top-down, non-specific policies that attempt to lock down solution shape before it is practical.

Three Improvements to Operationalise AI Effectively

1. People

The kinds of problems that AI can (and should) be used to solve are immensely complex, with large numbers of stakeholders and users, complicated and nuanced domains, and oftentimes existing systems already solving the problem. Combine this with the complexity of AI, and you have an immensely convoluted combination far beyond the comprehension and abilities of a single individual or even an organisation.

On this basis, it rapidly becomes clear that solving these kinds of problems to the best degree possible is not feasible without collaboration from a large number of people with a diverse set of experiences and backgrounds. We believe that a partnership approach is the best way to bring to bear the expertise required to reach a performant solution. These individuals must cover AI techniques, of course, which are a broad church in their own right. However, they must also include key decision makers and budget holders, users and design professionals, assurance, governance, and certification stakeholders, and experts in existing software and hardware systems.

Unifying such a large group is no mean feat, but in our experience, doing so early is the best way to get an accurate representation of a problem which captures all key performance considerations, from scientific performance to usability, from interface to integration, and on to deployment, certification, and assurance approaches. An early understanding of this multifaceted picture means systems can be optimised for the true problem and context rather than exploring rabbit holes or becoming misaligned with operational priorities and realities.

2. Problems

Given the right people are already in the room, AI must be used to solve the right problems, namely those which are a priority for operational users and can, therefore, justify the development expense. Operational priorities are also a must if we are to successfully capture the attention and support of a large group of stakeholders. That said, there is a balance to be struck. AI capability mustn’t be exaggerated, and it’s important to avoid overly risky concepts such as automating critical operations where human intuition is still important.

Maintaining this alignment and balance of risk necessitates frequent user feedback. It’s easier to engage these users when the problem in question is of operational relevance, and the potential benefit is clear. Feedback must, of course, be sought in the context of improving operational impact rather than focusing on the exploration of new technology.

3. Process

Feedback is only useful if it can be acted upon. This brings us to an examination of development and procurement processes. It’s vital to accept that complex problems are impossible to scope fully at an early stage and that the goal of seeking feedback is to adjust assumptions, priorities, and scope. This is to ensure that systems remain targeted against what matters most operationally.

Enabling this flexibility of scope means that procurement processes must acknowledge this requirement and introduce an increased level of agility. Many approaches exist to achieve this, including smaller, shorter, phased contracts with reused terms or call-off agreements with packages of work agreed over time. The exact solution doesn’t matter, but an ethos of flexibility and “learning by doing” is hugely valuable. It is no secret that organisations that can deal with and adapt to uncertainty are often the most successful.

Thinking Differently to Cross the Deployment Gap

There are numerous challenges to realising the true potential of AI in defence and national security, and most of them are not, in fact, technical. The difficulty lies instead in the supporting apparatus enabling the development and procurement of any technical solution, exacerbated by the complex and nuanced nature of AI systems’ capabilities, requirements, and the number of stakeholders involved.

Key tenets of software development like flexibility, transparency, and iteration have demonstrated their value through hard-won lessons over the past 25 years. They represent significant potential for creating the same value in defence and national security. The challenge is reconciling these ways of working with an industry that, for good reason, feels uncomfortable with the “move fast and break things” attitude, open sharing of information, and working with uncertainty. To bridge this gap, the AI industry and defence as a customer will have to work in ways that are not their norm.


When used by malicious actors or without considerations for transparency and responsibility, AI poses significant risks. Mind Foundry is working with...

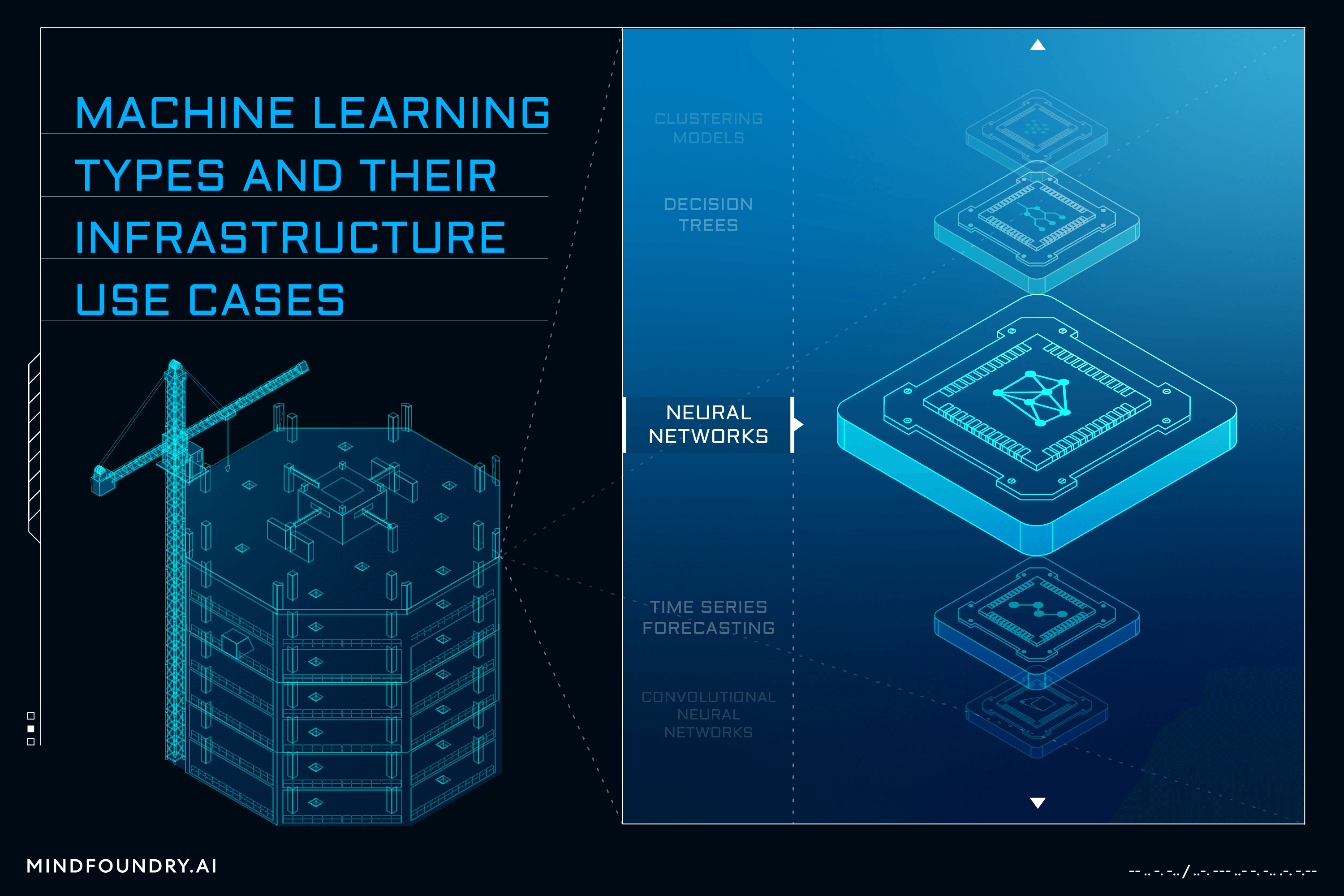

AI and Machine learning is a complex field with numerous models and varied techniques. Understanding these different types and the problems that each...

While the technical aspects of an AI system are important in Defence and National Security, understanding and addressing AI business considerations...